As data collection and analytics have grown exponentially in scale and scope, protecting individual privacy in large datasets has become an increasingly important issue. With vast amounts of sensitive personal information now generated and aggregated digitally every day, privacy safeguards are paramount for responsible data stewardship and for maintaining public trust. This is where data anonymization plays a pivotal role: it enables organizations to use their data assets for valuable insights while respecting privacy rights and complying with regulations.

In this article, we will explore data anonymization in depth, covering real-world examples, methods for anonymizing data, best practices and challenges. The goal is to provide a comprehensive yet accessible overview of how anonymization enables privacy-protective uses of data at scale.

What is Data Anonymization?

To understand data anonymization, it helps to first define what is meant by personal or sensitive data. This is any information relating to an identified or identifiable living individual, often called Personally Identifiable Information (PII). Common examples include names, addresses, dates of birth, ID numbers, locations, biometric data, financial details and medical records.

When individuals share their personal data, there is an implicit trust and social contract that it will be handled responsibly and solely for the purposes for which it was provided. However, as datasets grow in size and complexity, so does the risk of re-identification: data ostensibly stripped of identifiers can still be pieced together, sometimes with other sources, to trace it back to a specific person.

This is where data anonymization comes in. In simple terms, it is the process of removing or obscuring identifiers in a dataset that could reasonably be used, on their own or in combination with other available data sources, to identify the individual to whom the data pertains.

The goal is to transform personal data into a form that cannot be linked back to a particular individual, while retaining enough analytical richness for important uses such as medical research, product development and fraud detection. Anonymization therefore has to strike a delicate balance between enabling data-driven innovation and safeguarding individual privacy rights and expectations.

Real-World Examples of Anonymized Data

To help illustrate how anonymization works in practice, here are some common real-world examples where sensitive personal data is anonymized:

- Healthcare: Patient medical records, diagnoses, treatments and outcomes are anonymized in clinical research datasets by removing names, dates of birth, full addresses and other directly identifying fields. Pseudonymous unique IDs are used to link records belonging to the same patient without revealing the patient's identity.

- Finance: Financial transactions, account details and consumer behavior data are anonymized with techniques such as aggregation and cell suppression (withholding table cells that are based on very few individuals) before being provided to regulatory bodies for analysis and oversight without exposing individual customers.

- Smart devices: Usage data collected from internet-connected devices such as smart home appliances, fitness trackers and connected vehicles is anonymized to remove identifiers that could reveal device owners, while still enabling product improvements and aggregated analytics.

- Social media: Platforms share aggregated and anonymized user profile data (with names, profile photos and similar details removed) with advertisers and analysts, who use it to gain insights into demographics, preferences and trends while individual accounts remain private.

In each example, anonymization protects real identities while still allowing beneficial downstream uses, easing privacy concerns that could otherwise hinder data sharing and innovation.
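The healthcare example mentions unique IDs used to link records without revealing who they belong to. One common way to produce such IDs is a keyed hash (HMAC) of the original identifier, sketched below in Python. The key, the function name and the sample records are hypothetical. Strictly speaking this is pseudonymization rather than anonymization: anyone holding the key can recompute the mapping, and regulations such as the GDPR still treat pseudonymized data as personal data.

```python
import hashlib
import hmac

# Hypothetical secret key: in practice it would be long, randomly generated
# and stored separately from the data (e.g. in a key management system).
SECRET_KEY = b"replace-with-a-long-random-secret"


def pseudonymize_id(original_id: str, key: bytes = SECRET_KEY) -> str:
    """Map an original identifier to a stable pseudonymous token.

    The same input always yields the same token, so records belonging to
    the same person can still be linked, but the token cannot be
    recomputed or matched to the original ID without the key.
    """
    digest = hmac.new(key, original_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]  # truncated to 16 hex chars for readability


# Hypothetical visit records: two visits by patient 1234, one by 2345.
visits = [
    {"patient_id": "1234", "diagnosis": "Heart Disease"},
    {"patient_id": "1234", "diagnosis": "Hypertension"},
    {"patient_id": "2345", "diagnosis": "Diabetes"},
]

# Replace each original ID with its pseudonym, keeping the other fields.
linked = [{**v, "patient_id": pseudonymize_id(v["patient_id"])} for v in visits]
for row in linked:
    print(row)
```

Using a keyed HMAC rather than a plain hash matters here: identifiers such as patient numbers come from a small space, so an unkeyed hash could be reversed simply by hashing every possible ID.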

Methods for Anonymizing Data

Various technical methods are used to anonymize datasets, each striking a different balance between privacy, utility and feasibility. The most common include:

Data Masking

Data masking is commonly used to anonymize sensitive personal information such as names, addresses, national ID numbers and credit card numbers. The identifying values are removed and replaced with generic placeholders (such as 'Name', 'Address' or sequential numbers) or with substitute non-sensitive values. In some cases the values are encrypted instead; because anyone holding the key can recover encrypted values, encryption on its own is generally treated as pseudonymization rather than anonymization.

Data masking is generally applied before data is shared with third parties, stored permanently or used for testing. It protects sensitive data while retaining the data's format and the ability to perform certain analyses on it. However, because the actual values are gone, the masked data is less useful for any analysis that requires those specific values.
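As a minimal sketch of masking, the Python functions below replace direct identifiers with generic placeholders and mask all but the last four digits of a card number, so the value keeps its original format. The field names, placeholder values and the keep-last-four rule are illustrative assumptions, not a standard.

```python
# Hypothetical mapping of direct-identifier fields to generic placeholders.
PLACEHOLDERS = {"name": "Name", "address": "Address"}


def mask_card_number(card: str) -> str:
    """Replace every digit except the last four with 'X', keeping separators."""
    total_digits = sum(ch.isdigit() for ch in card)
    digits_seen = 0
    masked = []
    for ch in card:
        if ch.isdigit():
            digits_seen += 1
            # Keep only the final four digits; mask the rest.
            masked.append(ch if digits_seen > total_digits - 4 else "X")
        else:
            masked.append(ch)  # separators such as '-' are preserved
    return "".join(masked)


def mask_record(record: dict) -> dict:
    """Return a masked copy of a record; the input is left unchanged."""
    masked = dict(record)
    for field, placeholder in PLACEHOLDERS.items():
        if field in masked:
            masked[field] = placeholder
    if "card_number" in masked:
        masked["card_number"] = mask_card_number(masked["card_number"])
    return masked


customer = {
    "name": "John Doe",
    "address": "123 Main St, City, State",
    "card_number": "4111-1111-1111-1234",
    "balance": 250.0,
}
print(mask_record(customer))
# {'name': 'Name', 'address': 'Address', 'card_number': 'XXXX-XXXX-XXXX-1234', 'balance': 250.0}
```

The non-identifying `balance` field passes through untouched, so totals and averages can still be computed on the masked data.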

Data masking is often used by financial, healthcare and government organizations to anonymize files containing personal customer or citizen information before sharing them with external vendors for processing, or before using the data in non-production environments for development and testing. It reduces privacy risks by removing direct identifiers from the data.

Generalization

Generalization involves reducing the level of detail, or granularity, of quasi-identifier attributes such as dates and locations: attributes that do not identify anyone on their own but could be linked or combined with other sources to re-identify individuals.

For example, a date of birth may be generalized to the year of birth alone. Locations can be generalized to a broader area such as a city, region or country rather than a specific street address or ZIP code.

Similarly, attributes with high cardinality (many possible values) can be grouped into ranges, and rare extreme values can be "top-coded" by collapsing everything above a threshold into a single category. For example, instead of displaying exact salaries, incomes can be grouped into predefined bands, with all incomes above a cap reported as one top band.
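The generalization rules described above can be sketched as small Python functions. The three-digit ZIP prefix, the band width of 25,000 and the top-coding threshold of 150,000 are arbitrary choices for illustration; in practice they would be set from the dataset's sensitivity and the analysis it must support.

```python
from datetime import date


def generalize_dob(dob: date) -> int:
    """Keep only the year of birth."""
    return dob.year


def generalize_zip(zip_code: str, keep: int = 3) -> str:
    """Keep the first `keep` digits of a ZIP code and mask the rest."""
    return zip_code[:keep] + "*" * (len(zip_code) - keep)


def income_band(income: float, width: int = 25_000, top: int = 150_000) -> str:
    """Group incomes into fixed-width bands, top-coding everything >= `top`."""
    if income >= top:
        return f"{top:,}+"
    lower = int(income // width) * width
    return f"{lower:,}-{lower + width - 1:,}"


print(generalize_dob(date(1980, 1, 1)))  # 1980
print(generalize_zip("90210"))           # 902**
print(income_band(61_500))               # 50,000-74,999
print(income_band(480_000))              # 150,000+
```

Top-coding matters because a single very high income is often rare enough to identify its owner, even when the other incomes are banded.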

Generalization preserves more of the data's analytical utility than removing attributes entirely, as masking does. It is often combined with other techniques for datasets that need a balance between privacy, utility and feasibility. The appropriate level of generalization depends on the sensitivity of the data and on how much detail the intended analysis requires.

Perturbation

Perturbation involves distorting or scrambling the actual values in the dataset in a controlled manner so that individuals cannot be re-identified directly but aggregate analyses remain relatively accurate.

For example, ages may be randomly adjusted up or down by 1-3 years, income values rounded to the nearest $1,000, or ZIP codes altered by a digit or two. Such small distortions make it harder to link attribute values to specific people, while aggregate statistics such as averages remain approximately accurate.
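A minimal sketch of the two perturbations mentioned above (random age shifts of 1-3 years and rounding incomes to the nearest 1,000), applied to hypothetical sample values. It prints the averages before and after, to show that aggregate statistics stay close even though individual values change.

```python
import random
import statistics


def perturb_age(age: int, rng: random.Random, max_shift: int = 3) -> int:
    """Shift an age up or down by 1 to `max_shift` years, never below 0."""
    shift = rng.randint(1, max_shift) * rng.choice([-1, 1])
    return max(0, age + shift)


def round_income(income: float, step: int = 1000) -> int:
    """Round an income to the nearest multiple of `step`."""
    return int(round(income / step)) * step


rng = random.Random(42)  # fixed seed only so the sketch is reproducible

# Hypothetical sample values.
ages = [34, 29, 41, 52, 38, 45, 27, 60]
incomes = [48_250, 51_900, 62_400, 75_100, 58_700, 69_300, 44_800, 90_050]

noisy_ages = [perturb_age(a, rng) for a in ages]
rounded_incomes = [round_income(i) for i in incomes]

print("mean age:", statistics.mean(ages), "->", statistics.mean(noisy_ages))
print("mean income:", statistics.mean(incomes), "->", statistics.mean(rounded_incomes))
```

The fixed seed is only for reproducibility; a real deployment would use an unpredictable random source, since anyone who knows the seed could undo the shifts.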

The choice of perturbation technique and the acceptable range of distortion are important parameters that require domain expertise. Too little perturbation may not provide adequate privacy, while too much can severely reduce the analytical utility of the data. Along with generalization, perturbation is commonly used for financial, medical and research datasets where some variability is acceptable for analytics.

Differential Privacy

Differential privacy builds on perturbation with a formal, mathematical definition of privacy, using randomness calibrated to a "privacy budget". It adds carefully measured random noise to query results so that no individual record can provably influence the outputs by more than a bounded amount. This limits what anyone can learn about a particular participant, even by comparing the results of many analyses, since each analysis spends part of the budget.
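For reference, the standard formal definition can be stated as follows. A randomized mechanism $\mathcal{M}$ satisfies $\varepsilon$-differential privacy if, for every pair of datasets $D$ and $D'$ that differ in one individual's record and every set of possible outputs $S$:

$$
\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S]
$$

Here $\varepsilon$ is the privacy budget: smaller values force the two output distributions closer together and therefore give stronger privacy. One standard way to satisfy the definition for a numeric query $f$ with sensitivity $\Delta f = \max_{D, D'} |f(D) - f(D')|$ is the Laplace mechanism, which releases

$$
\mathcal{M}(D) = f(D) + \mathrm{Lap}\!\left(\frac{\Delta f}{\varepsilon}\right)
$$

Under basic sequential composition, answering $k$ queries with budgets $\varepsilon_1, \dots, \varepsilon_k$ consumes a total budget of $\sum_{i=1}^{k} \varepsilon_i$.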

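Differential privacy is often used for publishing open data when strong privacy protections are needed. It enables useful population-level insights while limiting the risk of probabilistic re-identification of individuals. The amount of noise is calibrated to how much a single record can change a query's result and to the chosen privacy budget, and choosing that budget is a policy decision that trades accuracy against privacy. Differential privacy is mathematically rigorous but less widely adopted than traditional techniques, although it has seen high-profile deployments such as the US Census Bureau's 2020 Census.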
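As an illustration, the sketch below applies the Laplace mechanism to a simple counting query over hypothetical patient records. A count has sensitivity 1 (adding or removing one person changes it by at most 1), so the noise scale is 1/ε. The Laplace sample is built from two exponential draws from Python's standard library. This is for explanation only: production systems should rely on a vetted differential privacy library, because naive floating-point noise generation has known weaknesses.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    The sensitivity of a count is 1, so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical dataset: 120 patients with diabetes, 80 with heart disease.
patients = [{"condition": "Diabetes"}] * 120 + [{"condition": "Heart Disease"}] * 80

rng = random.Random(7)  # seeded only to make the sketch reproducible
for eps in (0.1, 1.0):
    noisy = dp_count(patients, lambda r: r["condition"] == "Diabetes", eps, rng)
    print(f"epsilon={eps}: noisy count ~ {noisy:.1f} (true count 120)")
```

Running it shows the trade-off: with epsilon = 0.1 the released count typically lands much further from 120 than with epsilon = 1.0, and every such query consumes part of the privacy budget.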

Synthetic Data

Instead of altering real data values, synthetic data generation programmatically creates artificial yet representative records based on patterns and distributions in the original dataset. Statistical or machine learning models are trained to learn the structure of the source data and are then used to generate new records that could plausibly have been drawn from the same population.

Synthetic data retains analytical properties of the real data, making it useful for testing models and systems with far fewer privacy and compliance concerns. Because synthetic records do not correspond to real individuals, re-identification risk is greatly reduced, although a model that overfits can reproduce real records almost exactly, so the output should still be checked. Generating good synthetic data requires sophistication to model all the necessary statistical relationships accurately. It is increasingly used where strong privacy is paramount and sharing real data is risky, such as in open science and medical research.
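The sketch below shows the simplest possible form of synthetic data generation, using hypothetical records: it learns each column's distribution separately (mean and standard deviation for numeric columns, assuming they are roughly normal, and value frequencies for categorical ones) and samples new rows from them. Because columns are sampled independently, relationships such as the link between age and condition are lost, which illustrates why realistic synthetic data needs more sophisticated models.

```python
import random
import statistics
from collections import Counter


def fit_marginals(rows, numeric, categorical):
    """Learn a per-column model: mean/stdev for numeric columns,
    value frequencies for categorical columns."""
    model = {}
    for col in numeric:
        values = [r[col] for r in rows]
        model[col] = ("numeric", statistics.mean(values), statistics.stdev(values))
    for col in categorical:
        counts = Counter(r[col] for r in rows)
        model[col] = ("categorical", list(counts), list(counts.values()))
    return model


def sample_synthetic(model, n, rng):
    """Draw n artificial rows, sampling every column independently."""
    rows = []
    for _ in range(n):
        row = {}
        for col, spec in model.items():
            if spec[0] == "numeric":
                _, mu, sigma = spec
                row[col] = round(rng.gauss(mu, sigma), 1)
            else:
                _, values, weights = spec
                row[col] = rng.choices(values, weights=weights)[0]
        rows.append(row)
    return rows


# Hypothetical "real" records used only to fit the toy model.
real = [
    {"age": 34, "bmi": 24.1, "condition": "Diabetes"},
    {"age": 58, "bmi": 29.3, "condition": "Heart Disease"},
    {"age": 47, "bmi": 27.8, "condition": "Diabetes"},
    {"age": 62, "bmi": 31.0, "condition": "Heart Disease"},
    {"age": 29, "bmi": 22.5, "condition": "Asthma"},
]

model = fit_marginals(real, numeric=["age", "bmi"], categorical=["condition"])
synthetic = sample_synthetic(model, n=3, rng=random.Random(1))
for row in synthetic:
    print(row)
```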

The appropriate technique depends on factors like the nature and sensitivity of the data attributes as well as analytical and privacy requirements. Often, a combination of methods is used for layered anonymization.

Example of Anonymized Data

To demonstrate how this works in practice, here is a hypothetical set of patient records before and after anonymization:

Before anonymization:

| Patient ID | Patient Name | Patient DOB | Address | Medical Condition |
|---|---|---|---|---|
| 1234 | John Doe | 01/01/1980 | 123 Main St, City, State | Heart Disease |
| 2345 | Jane Smith | 02/15/1985 | 456 Elm Ave, City, State | Diabetes |

After anonymization:

| Patient ID* | Name | Year of Birth | Region | Medical Condition |
|---|---|---|---|---|
| Patient ID* | Name1 | 1980 | Region 1, State | Cardiovascular Condition |
| Patient ID* | Name2 | 1985 | Region 2, State | Endocrine Disorder |

*Patient IDs are now randomized strings rather than actual IDs.

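Direct identifiers have been masked (names replaced with placeholders, patient IDs replaced with random strings), quasi-identifiers have been generalized (full dates of birth reduced to the year, street addresses reduced to a region), and medical conditions have been generalized to broader categories. The result retains enough detail for research while protecting individual privacy.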
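The transformation in the table can be reproduced with a short Python sketch. The lookup tables mapping addresses to regions and conditions to categories are hypothetical stand-ins for a real geographic hierarchy and clinical coding system, and the record field names are assumptions.

```python
import secrets
from datetime import datetime

# Hypothetical lookup tables; a real project would use an established
# clinical coding system and a defined geographic hierarchy.
CONDITION_CATEGORY = {
    "Heart Disease": "Cardiovascular Condition",
    "Diabetes": "Endocrine Disorder",
}
REGION_OF = {
    "123 Main St, City, State": "Region 1, State",
    "456 Elm Ave, City, State": "Region 2, State",
}


def anonymize(records):
    """Mask direct identifiers and generalize quasi-identifiers."""
    out = []
    for i, rec in enumerate(records, start=1):
        out.append({
            "patient_id": secrets.token_hex(8),  # random, not derived from the original ID
            "name": f"Name{i}",                  # placeholder replaces the real name
            "birth_year": datetime.strptime(rec["dob"], "%m/%d/%Y").year,
            "region": REGION_OF[rec["address"]],
            "condition": CONDITION_CATEGORY[rec["condition"]],
        })
    return out


before = [
    {"patient_id": "1234", "name": "John Doe", "dob": "01/01/1980",
     "address": "123 Main St, City, State", "condition": "Heart Disease"},
    {"patient_id": "2345", "name": "Jane Smith", "dob": "02/15/1985",
     "address": "456 Elm Ave, City, State", "condition": "Diabetes"},
]

for row in anonymize(before):
    print(row)
```

Because the new IDs are random and no mapping back to the original IDs is kept, later records for the same patient cannot be linked to these rows. If such linkage were needed, a keyed pseudonym would be used instead, which weakens the anonymity guarantee.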

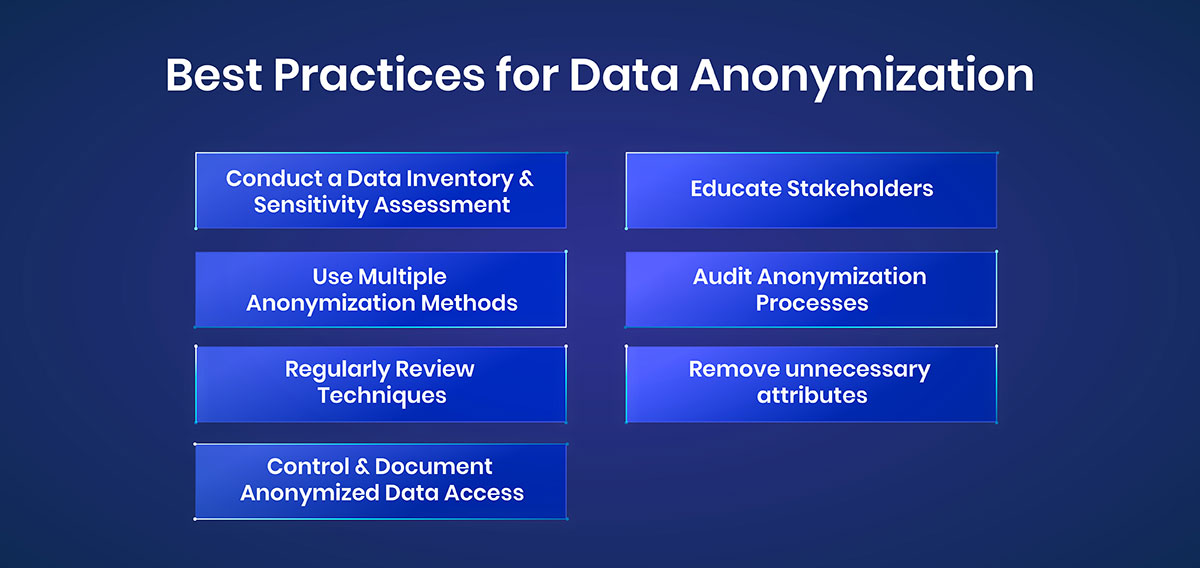

Best Practices for Anonymization

To maximize the effectiveness of data anonymization programs, organizations should adopt the following key best practices:

- Conduct a Data Inventory & Sensitivity Assessment: Understand what data is collected, how it is classified and linked, where and how it flows, and how sensitive it is.

- Use Multiple Anonymization Methods: Combining techniques, such as masking direct identifiers and generalizing quasi-identifiers, generally provides stronger protection against re-identification attacks than any single technique.

- Regularly Review Techniques: Anonymization must evolve with new privacy risks and analytical approaches to maintain standards over time.

- Control & Document Anonymized Data Access: Strict access governance limits anonymized data to approved, privacy-preserving uses and provides a record for oversight.

- Educate Stakeholders: Foster a culture in which data custodians and users understand their role in upholding anonymization policies and protocols.

- Audit Anonymization Processes: Regular reviews check techniques, access controls and oversight mechanisms to identify issues and areas for improvement.

- Remove Unnecessary Attributes: Retain only the attributes needed for the intended use case or analysis. The fewer quasi-identifiers a dataset contains, the lower its re-identification risk.
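One common way to measure the effect of removing quasi-identifiers is k-anonymity: a dataset is k-anonymous if every combination of quasi-identifier values is shared by at least k records. The sketch below computes k for a small hypothetical table and shows that dropping one quasi-identifier (sex) raises k from 1 to 3.

```python
from collections import Counter


def smallest_group(rows, quasi_identifiers):
    """Return k: the size of the smallest group of rows that share
    identical values on all the given quasi-identifiers."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in rows)
    return min(groups.values())


# Hypothetical, already-generalized records.
rows = [
    {"birth_year": 1980, "zip3": "902", "sex": "F", "condition": "Diabetes"},
    {"birth_year": 1980, "zip3": "902", "sex": "M", "condition": "Asthma"},
    {"birth_year": 1980, "zip3": "902", "sex": "F", "condition": "Heart Disease"},
    {"birth_year": 1985, "zip3": "100", "sex": "M", "condition": "Diabetes"},
    {"birth_year": 1985, "zip3": "100", "sex": "F", "condition": "Asthma"},
    {"birth_year": 1985, "zip3": "100", "sex": "M", "condition": "Diabetes"},
]

print(smallest_group(rows, ["birth_year", "zip3", "sex"]))  # 1
print(smallest_group(rows, ["birth_year", "zip3"]))         # 3
```

k-anonymity is only a partial measure: if every record in a group shares the same sensitive value, that value is still disclosed for anyone known to belong to the group.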

Following best practices helps address complexities, reinforces accountability across organizational functions and builds trust that privacy is taken seriously in data-driven initiatives.

Challenges of Data Anonymization

Although data anonymization is a crucial privacy technique, it comes with challenges that must be addressed:

- Re-identification Risk: Even after direct identifiers are removed, combinations of quasi-identifiers (such as ZIP code, date of birth and sex) can sometimes be matched against outside information, such as public records, to pinpoint individuals. Large, detailed datasets are especially exposed.

- Data Utility Trade-off: Stronger anonymization can strip out so much detail that the data loses analytical value for research or product development, while weaker approaches leave greater privacy risk.

- Evolving Techniques: Anonymization must continuously adapt to address new types of emerging data, evolving re-identification methods and changing use scenarios over time.

- Regulatory Compliance: Interpreting and abiding by privacy regulations across jurisdictions adds complexity requiring diligent oversight of anonymization practices.

- Operationalization at Scale: Implementing high-quality, consistent anonymization across huge volumes of data in disparate formats from various sources strains technological and staff capacity.
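Re-identification risk can be illustrated with a minimal linkage attack: joining a "de-identified" release to an outside dataset on shared quasi-identifiers. All records below are hypothetical. Any released row whose quasi-identifier values match exactly one named person in the outside source is re-identified, together with its sensitive attribute.

```python
# Hypothetical "de-identified" release: names removed, quasi-identifiers kept.
released = [
    {"zip": "10001", "dob": "1975-04-12", "sex": "F", "diagnosis": "Hypertension"},
    {"zip": "10002", "dob": "1988-09-30", "sex": "M", "diagnosis": "Asthma"},
]

# Hypothetical outside source that includes names, such as a public register.
public_register = [
    {"name": "A. Example", "zip": "10001", "dob": "1975-04-12", "sex": "F"},
    {"name": "B. Sample", "zip": "10003", "dob": "1990-02-11", "sex": "M"},
]

QUASI_IDENTIFIERS = ("zip", "dob", "sex")


def link(released_rows, outside_rows, keys=QUASI_IDENTIFIERS):
    """Join two datasets on shared quasi-identifiers (a linkage attack)."""
    index = {}
    for person in outside_rows:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])
    matches = []
    for row in released_rows:
        names = index.get(tuple(row[k] for k in keys), [])
        if len(names) == 1:  # a unique match re-identifies the record
            matches.append((names[0], row["diagnosis"]))
    return matches


print(link(released, public_register))  # [('A. Example', 'Hypertension')]
```

Generalizing or suppressing quasi-identifiers so that each combination matches several people is what defeats this kind of join: a non-unique match no longer singles anyone out.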

Effective risk assessment and mitigation, combined with ongoing evaluation and refinement, help address these challenges at the scale of today's data. No approach eliminates risk completely, but properly deployed anonymization manages it responsibly.

Conclusion

In an era of ubiquitous data collection, anonymization is an essential tool for deriving analytical innovation and business insights from personal information while upholding ethics, building confidence and abiding by both the letter and the spirit of privacy regulations. When implemented carefully according to best practices and with diligent risk management, it strikes a workable balance that lets individual privacy rights and data-driven progress co-exist productively.

Looking ahead, anonymization will remain central to ethical data practices that protect individual identities and treat privacy as a human right, even in large, commercially valuable datasets.