Data is powering digital transformation and fueling competitive advantage across every industry. However, that data is useless if companies don’t assess it and put it to work. This is where data ingestion comes in - the critical process of moving data from where it lives into the platforms, analytics engines, and applications that drive intelligence and innovation.

In short, data ingestion unlocks data’s potential. When done right, it sets the stage for game-changing analyses. Here’s a deeper look at what data ingestion entails, why it’s so vital, and how to implement it effectively.

What is Data Ingestion?

Data ingestion refers to the transfer of information from one or more source systems into a target datastore or application built for further processing, analysis, and use.

The sources feeding data may include:

- Databases

- IoT devices

- Cloud apps

- Social media

- Web apps

- ERP systems

- And more

The receiving platforms are typically:

- Data warehouses

- Data lakes

- Streaming analytics hubs

- Business intelligence tools

- Data science modeling environments

- Applications in need of data

So, in simple terms, data ingestion moves raw information from all the systems collecting it into centralized destinations where it can drive intelligence and value.

This sets data up for:

- Storage and management

- Cleanup and organization

- Analytics and reporting

- Training machine learning models

- Embedding into apps and workflows

Without data ingestion, none of these vital activities can happen. Information remains scattered in silos rather than unified for use. Every aspect of data-driven business therefore depends on efficient, reliable data movement.

Types of Data Ingestion

There are a few core methods for moving data from source systems into centralized destinations. The main data ingestion types are:

Batch Processing

Batch data ingestion gathers defined data feeds at regular intervals in chunks. This could mean pulling in last night’s sales data every morning or aggregating device sensor records every hour. Batches are then processed and loaded in groups.

Batch loading works well for high-volume, predictable data streams where some latency is acceptable. The regular patterns make planning and resource allocation easier. The trade-off is that insights are only as fresh as the last completed batch cycle.
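
As a rough illustration of batch loading, the sketch below groups a hypothetical nightly sales extract into fixed-size chunks and loads each chunk in its own transaction. The table name, columns, batch size, and the in-memory SQLite target are all assumptions made for the example, not part of any particular platform.

```python
import sqlite3
from itertools import islice


def batched(rows, size):
    """Yield successive lists of at most `size` rows."""
    it = iter(rows)
    while chunk := list(islice(it, size)):
        yield chunk


def load_in_batches(conn, rows, batch_size=2):
    """Insert rows one batch per transaction and return the number of batches."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (order_id INTEGER, amount REAL)")
    batches = 0
    for chunk in batched(rows, batch_size):
        with conn:  # commits the batch, or rolls it back if an insert fails
            conn.executemany("INSERT INTO sales VALUES (?, ?)", chunk)
        batches += 1
    return batches


# Hypothetical "last night's sales" extract
nightly_sales = [(1, 19.99), (2, 5.00), (3, 42.50), (4, 7.25), (5, 13.00)]
conn = sqlite3.connect(":memory:")
batch_count = load_in_batches(conn, nightly_sales, batch_size=2)
row_count = conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
```

Loading one batch per transaction means a failure affects only the current chunk, which is one common reason to batch in the first place.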

Real-Time Streaming

For data that is highly time-sensitive, real-time data ingestion uses streaming to instantly pipe events, observations, and changes from sources. Rather than wait for batch windows, streaming allows continuous flow directly into target systems.

This could include stock trades, IoT sensor readings, or clicks happening on a website. The low latency enables rapid response to emerging trends, issues, and opportunities uncovered in data. Apps and predictions that depend on constantly refreshed data rely on streaming.
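
To make the contrast with batching concrete, here is a minimal sketch that handles website click events one at a time and keeps a rolling count over the most recent events. The `click_stream` generator is a stand-in for a real event source such as a message queue, and the event shape and window size are assumptions.

```python
from collections import deque


def click_stream():
    """Hypothetical event source; a real one would read from a queue or socket."""
    for page in ["/home", "/pricing", "/home", "/checkout", "/home"]:
        yield {"page": page}


def rolling_page_counts(events, window=3):
    """Process events as they arrive, yielding counts over the last `window` events."""
    recent = deque(maxlen=window)  # old events fall off automatically
    for event in events:
        recent.append(event["page"])
        counts = {}
        for page in recent:
            counts[page] = counts.get(page, 0) + 1
        yield counts


snapshots = list(rolling_page_counts(click_stream(), window=3))
```

Each incoming event produces an updated snapshot immediately, so a consumer never has to wait for a batch window to close.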

Change Data Capture

Change Data Capture (CDC) is a special form of real-time data ingestion geared to transaction systems like databases. CDC watches for and identifies incremental updates, inserts, and deletions that happen in source databases through log scanning. Only this changed data is streamed to destinations, keeping overhead low.

So rather than re-scanning entire databases repeatedly to find modifications, CDC spots and streams edits made to tables and records as they occur. This makes CDC highly efficient for ingesting live changes from critical systems of record into data platforms.
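
The idea of shipping only changes can be sketched as a small function that replays an ordered change log against a target copy. The event format (`op`, `key`, `row`) is invented for illustration; real CDC tools define their own formats and read them from the database log.

```python
def apply_changes(target, change_log):
    """Apply ordered change events to a target table keyed by primary key."""
    for change in change_log:
        op, key = change["op"], change["key"]
        if op in ("insert", "update"):
            # Merge changed columns into the existing row, if there is one
            target[key] = {**target.get(key, {}), **change["row"]}
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return target


# Hypothetical target copy and change events captured from a source database
replica = {1: {"name": "Ada", "tier": "free"}}
change_log = [
    {"op": "insert", "key": 2, "row": {"name": "Lin", "tier": "free"}},
    {"op": "update", "key": 1, "row": {"tier": "pro"}},
    {"op": "delete", "key": 2},
]
apply_changes(replica, change_log)
```

Only three small events cross the wire here, regardless of how large the source table is.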

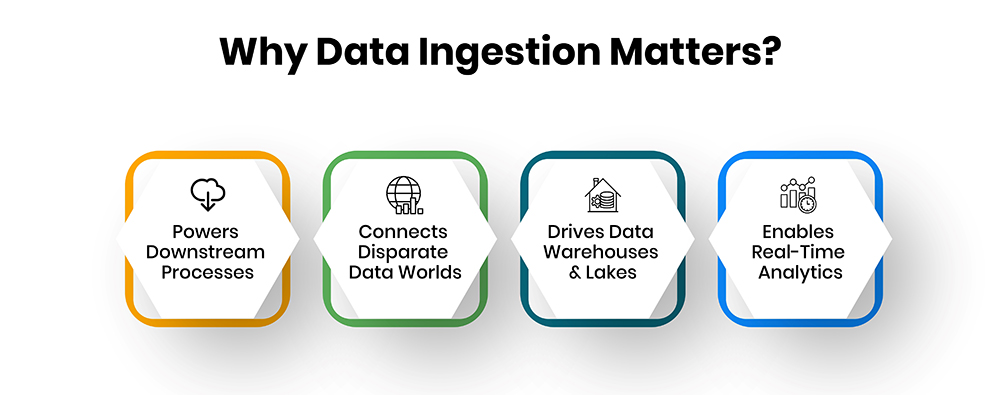

Why Data Ingestion Matters

Getting the right data pipelines in place is crucial because data ingestion:

Powers Downstream Processes

All data analytics and applications fundamentally depend on access to data - and plenty of it. Data ingestion kicks off this pipeline that all other critical data operations rely on.

Without properly ingesting data from the start, data teams can’t clean it, store it, analyze it, or otherwise gain value. The business loses out on the insights and innovations that data makes possible.

Connects Disparate Data Worlds

Most companies now run a multitude of disconnected systems capturing valuable data - CRM software, marketing applications, IoT devices, operational databases, and more. Data ingestion provides the glue to integrate these disparate worlds.

It provides a unified information supply consolidated from across all the organization’s data pockets. This single reliable feed powers functionality and analytics. Data ingestion breaks down silo walls to fuel enterprise intelligence.

Drives Data Warehouses & Lakes

Two common consumption targets for ingested data are data warehouses and data lakes. Both serve as reservoirs supplying analytics engines, BI tools, and data science efforts.

Data ingestion pipelines keep these data hubs refreshed by constantly pumping in new structured information from transaction systems, applications, devices, and external sources. This powers everything from dashboards to predictive models to AI systems that need large volumes of clean data.

Enables Real-Time Analytics

Streaming data ingestion opens the door for instant analytics rather than delayed insights. By piping observations, events, and changes directly into analytics platforms and data science environments, teams can uncover and act on emerging trends and moments of opportunity.

Rather than waiting for batched data to arrive, real-time ingestion lets crucial new signals trigger immediate alerts. This can drive ultra-responsive applications, dynamic operational decisions, and timely predictions.

The Foundation for Innovation

At a higher level, reliable data ingestion lays the groundwork enabling all data-driven innovation - which today gives companies their competitive edge. Data powers the most impactful business improvements and disruptive new offerings.

However, without effective, scalable data ingestion, teams can’t use information to identify opportunities or take well-informed risks. Every pipeline output that fuels transformation relies on quality inputs ingested from across the data landscape.

Challenges with Data Ingestion

As critical as it is, data ingestion comes with common pain points, including:

Data Volume Overload

Data volume, variety, and velocity are growing rapidly, and data arrives from more sources than ever. Simply keeping pace with ingestion demands already strains resources, and pipelines that cannot scale responsively risk falling further behind.

Security & Compliance Pitfalls

Each new data source introduces potential security holes and compliance exposures if not managed carefully. Teams must account for regulations and standards such as GDPR and PCI DSS while also securing data flows. Lapses lead to breaches and violations.

Unplanned Downtime

Inconsistent data flows wreak havoc on functions expecting them. Network blips, unexpected system changes upstream, and even source app crashes can suddenly break ingestion. Plans for resilience are crucial.

Dependency Sprawl

Complex interlinked architectures make adjusting ingestion highly risky. Uncoordinated changes across owning teams can create catastrophic upstream and downstream effects that are difficult to anticipate. Careful coordination is key.

Silo Persistence

Getting access to source data controlled by other groups can be a battle. Political holdouts who won’t share data lead to incomplete datasets and misleading perspectives. Manual interventions create bottlenecks.

Rising Costs

Maintaining high performance at scale means provisioning infrastructure for peak capacity, and spikes are increasingly expensive to accommodate. Securing the required capital expenditure often means selling the case to executives.

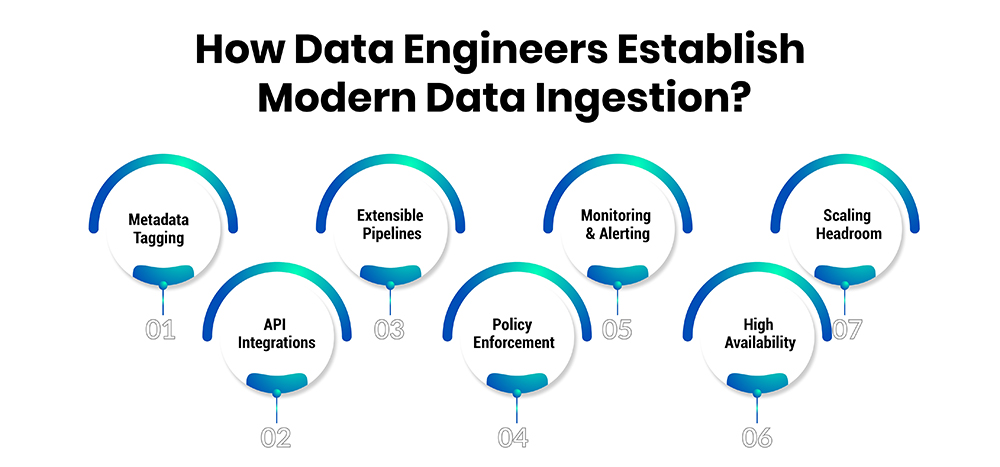

How Data Engineers Establish Modern Data Ingestion

Capable data engineers are invaluable for tackling the many complexities of instituting resilient data ingestion. They apply architectural principles and platform capabilities to orchestrate robust data flows, including:

Metadata Tagging

Tagging datasets with standardized descriptive metadata keeps them organized and adds context for transformations and destination consumption. This makes later handling stages easier to automate.
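
One way to picture metadata tagging is a small wrapper that carries descriptive tags alongside the records, so later stages can act on the tags alone. The field names (`source`, `schema_version`, `contains_pii`) and the zone names are hypothetical choices for this sketch.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class TaggedDataset:
    """Records plus the descriptive metadata that travels with them."""
    records: list
    source: str
    schema_version: str
    contains_pii: bool = False
    ingested_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def route(dataset):
    """Pick a destination from the tags alone, without inspecting the records."""
    return "restricted_zone" if dataset.contains_pii else "general_zone"


crm = TaggedDataset([{"email": "a@example.com"}], source="crm",
                    schema_version="1.0", contains_pii=True)
sensors = TaggedDataset([{"temp_c": 21.5}], source="iot", schema_version="2.1")
```

Here `route(crm)` sends the CRM extract to the restricted zone purely because of its tag, which is the kind of automation standardized metadata allows.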

API Integrations

Leveraging rich platform APIs simplifies connecting diverse data environments by abstracting the underlying implementation and making components interchangeable. Well-designed APIs encourage secure reuse.
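
The abstraction benefit can be sketched with a shared interface that every connector implements, so the ingestion code never needs to know what sits behind it. The `Source` protocol and both connector classes are hypothetical and stand in for real API clients.

```python
from typing import Iterable, Protocol


class Source(Protocol):
    """Anything that can hand over records as dictionaries."""
    def read(self) -> Iterable[dict]: ...


class InMemoryCrm:
    """Stand-in for a CRM API client."""
    def read(self):
        return [{"customer": "Ada"}]


class CsvLines:
    """Stand-in for a file-based export; parses simple comma-separated lines."""
    def __init__(self, lines):
        self.lines = lines

    def read(self):
        header, *rows = [line.split(",") for line in self.lines]
        return [dict(zip(header, row)) for row in rows]


def ingest(sources):
    """Collect records from any mix of sources through the shared interface."""
    return [record for source in sources for record in source.read()]


records = ingest([InMemoryCrm(), CsvLines(["customer,plan", "Lin,pro"])])
```

Swapping one connector for another requires no change to `ingest`, which is the interchangeability the paragraph above describes.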

Extensible Pipelines

Configurable pipeline templates codify repeatable best-practice processes for common integration needs - while still allowing customization. These accelerate the delivery and maintenance of reliable data flows.
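
A pipeline template can be as simple as a list of default steps that callers extend. In this sketch the step functions and the `build_pipeline` helper are illustrative names, not taken from any specific framework.

```python
def drop_nulls(records):
    """Default step: discard records that contain any missing value."""
    return [r for r in records if all(v is not None for v in r.values())]


def lowercase_keys(records):
    """Default step: normalize field names to lowercase."""
    return [{k.lower(): v for k, v in r.items()} for r in records]


DEFAULT_STEPS = [drop_nulls, lowercase_keys]


def build_pipeline(extra_steps=()):
    """Return a runner that applies the default steps, then any custom ones."""
    steps = [*DEFAULT_STEPS, *extra_steps]

    def run(records):
        for step in steps:
            records = step(records)
        return records

    return run


def add_region(records):
    """Custom step supplied by one team; the region value is made up."""
    return [{**r, "region": "eu"} for r in records]


pipeline = build_pipeline(extra_steps=[add_region])
result = pipeline([{"ID": 1, "Name": "Ada"}, {"ID": 2, "Name": None}])
```

The shared defaults stay in one place, while each team adds only the steps that are specific to its data.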

Policy Enforcement

Forward-thinking platforms centralize control points by applying rules governing flows for security, compliance, and operational safeguards. Checking against centrally managed policies reduces risks.
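
Central policy checks might look like the following sketch, where every record passes through one `enforce` function driven by a single policy table. The policy names and field lists are assumptions, and the truncated hash is only a simple masking stand-in, not a vetted anonymization scheme.

```python
import hashlib

# Hypothetical centrally managed policy table
POLICIES = {
    "mask_fields": {"email", "card_number"},
    "forbidden_fields": {"password"},
}


def enforce(record, policies=POLICIES):
    """Reject records with forbidden fields and mask sensitive ones."""
    blocked = policies["forbidden_fields"].intersection(record)
    if blocked:
        raise ValueError(f"policy violation, forbidden fields: {sorted(blocked)}")
    cleaned = {}
    for name, value in record.items():
        if name in policies["mask_fields"]:
            # Replace the raw value with a short, stable digest
            cleaned[name] = hashlib.sha256(str(value).encode()).hexdigest()[:12]
        else:
            cleaned[name] = value
    return cleaned


cleaned = enforce({"id": 1, "email": "a@example.com"})
```

Because every flow calls the same function, changing a rule in `POLICIES` updates all pipelines at once.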

Monitoring & Alerting

End-to-end visibility into pipeline status, data quality, and runtime metrics is crucial for performance, uptime, and issue prevention. Proactive alerting on early warning signs minimizes downstream disruption.
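
As a small example of proactive alerting, the sketch below computes two data quality metrics for a batch and compares them against thresholds. The metric names, required fields, and threshold values are illustrative assumptions; a production setup would send alerts to a monitoring system rather than return a list.

```python
def run_metrics(records, required_fields):
    """Compute the row count and the share of records missing a required field."""
    total = len(records)
    missing = sum(
        1 for r in records if any(r.get(f) is None for f in required_fields)
    )
    return {"row_count": total, "null_rate": missing / total if total else 0.0}


def check_alerts(metrics, min_rows=1, max_null_rate=0.1):
    """Return a list of human-readable alerts for any breached threshold."""
    alerts = []
    if metrics["row_count"] < min_rows:
        alerts.append("row count below expected minimum")
    if metrics["null_rate"] > max_null_rate:
        alerts.append("null rate above threshold")
    return alerts


batch = [
    {"id": 1, "amount": 9.5},
    {"id": 2, "amount": None},
    {"id": 3, "amount": 4.0},
    {"id": 4, "amount": 1.0},
]
metrics = run_metrics(batch, required_fields=["id", "amount"])
alerts = check_alerts(metrics, min_rows=1, max_null_rate=0.1)
```

One record in four is missing an amount, so the null-rate check fires before the bad batch reaches downstream consumers.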

High Availability

Multi-region deployments, redundancy, failover, and recovery procedures prepare for and withstand component failures and disasters. Meeting service-level demands means planning for worst-case scenarios.
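
Failover can be sketched as trying each source in priority order, retrying with a short exponential backoff before moving to the next one. The `primary` and `replica` functions simulate two regions, and the retry count and delays are arbitrary values for the example.

```python
import time


def fetch_with_failover(sources, retries=2, base_delay=0.01):
    """Try each (name, fetch) pair in order, retrying before failing over."""
    errors = []
    for name, fetch in sources:
        for attempt in range(retries):
            try:
                return name, fetch()
            except ConnectionError as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                time.sleep(base_delay * 2 ** attempt)  # exponential backoff
    raise RuntimeError("all sources failed: " + "; ".join(errors))


def primary():
    """Simulated primary region that is currently down."""
    raise ConnectionError("primary region unreachable")


def replica():
    """Simulated secondary region that still responds."""
    return [{"id": 1}]


used, rows = fetch_with_failover([("primary", primary), ("replica", replica)])
```

Ingestion continues from the replica after the primary exhausts its retries, and the collected error messages remain available if every source fails.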

Scaling Headroom

Balancing cost consciousness against spare infrastructure capacity for traffic spikes makes it possible to support rapid data growth. Cloud data integration platforms provide options for flexible, cost-optimized scaling.

With these leading practices for resilient data ingestion in place, the vital first mile of the data pipeline provides a solid foundation for game-changing analytics innovation.

Conclusion

With reliable data ingestion powering analytics engines and data-driven applications, companies gain competitive advantages through actionable intelligence. They can rapidly uncover and respond to customer and operational signals, power dynamic decision automation, feed predictive models, and embed intelligence in offerings.

By effectively extracting and loading data across their technology ecosystems, organizations set the stage for game-changing innovation. Smooth data ingestion is the lifeblood that enables everything from real-time alerts to AI assistants to visionary new business models. For any company pursuing a data-driven digital strategy, tackling data ingestion is among the most enabling investments it can make.