In a world saturated with data, SQL is an essential skill for data analysis. As business decision-making becomes increasingly data-driven, SQL lets analysts filter, manipulate, and transform raw data into useful information. This article takes a practical, step-by-step look at SQL techniques for data analysis, from core clauses to advanced features, and shows how to improve both the speed and the accuracy of your queries.

Building and Configuring Your SQL Environment for Data Analysis

Before using SQL for data analytics, you need a working environment. Whether you're using MySQL, PostgreSQL, or SQL Server, a correct installation and configuration keeps your workflow and analysis process efficient.

Key Steps for Environment Setup:

- Choose the Right Tool: Select a database management system (DBMS), such as MySQL, PostgreSQL, or SQL Server, according to the particular requirements of your project.

- Install and Configure: Download and install the DBMS you chose, then configure it to connect to your local or cloud database.

- Create a Database: Create a new database to hold your data. It will serve as the foundation for your analysis.

- Import Data for Analysis: Load your datasets into the database, whether they come from CSV files or other data sources.
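The last two steps can be sketched in a few lines. This is a minimal illustration, assuming Python's built-in sqlite3 module as a stand-in for a full DBMS so that it runs without any installation; the `customers` table, its columns, and the sample rows are invented for the example.

```python
import csv
import io
import sqlite3

# Stand-in for a CSV file on disk; in practice you would use open("customers.csv").
CSV_TEXT = """name,age,city
Ana,34,Lisbon
Ben,28,Berlin
Chloe,41,Lisbon
"""

# ":memory:" creates a throwaway database; pass a file path to persist it.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT, age INTEGER, city TEXT)")

# Read the CSV rows and bulk-insert them with a parameterized statement.
reader = csv.DictReader(io.StringIO(CSV_TEXT))
rows = [(r["name"], int(r["age"]), r["city"]) for r in reader]
conn.executemany("INSERT INTO customers (name, age, city) VALUES (?, ?, ?)", rows)
conn.commit()

# Confirm that every CSV row arrived in the table.
row_count = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
print(row_count)
```

MySQL, PostgreSQL, and SQL Server each ship their own bulk-import commands, which are usually faster than row-by-row inserts for large files.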

Essential SQL Techniques for Data Analysis

Applying SQL to data analytics starts with a solid understanding of the basic query operations below. These core clauses let analysts retrieve, filter, group, and sort data, which is the groundwork for deeper insights.

- SELECT and WHERE Clauses: The two most fundamental SQL clauses are SELECT, which retrieves specific columns from a table, and WHERE, which filters rows based on a condition.

Code Snippet

SELECT name, age FROM customers WHERE age > 30;

- GROUP BY and HAVING: GROUP BY collects rows that share the same values in one or more columns into groups, usually so that an aggregate function such as COUNT or SUM can be applied to each group. The HAVING clause then filters those groups, just as WHERE filters individual rows.

Code Snippet

SELECT city, COUNT(*) FROM customers GROUP BY city HAVING COUNT(*) > 5;

- ORDER BY: Sorting is another common operation in data analysis. The ORDER BY clause sorts the result set of a query by one or more columns, in ascending or descending order.

Code Snippet

SELECT name, salary FROM employees ORDER BY salary DESC;

Each of the above techniques is the cornerstone of constructing precise and effective queries. These are the foundations of SQL for data analysis, which allow users to easily organize, search and sort through large volumes of data.
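To see how these clauses fit together in a single query, here is a small sketch, assuming Python's built-in sqlite3 module and an invented `customers` table. The comments mark the logical role of each clause: WHERE filters rows before grouping, HAVING filters groups afterwards.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT, age INTEGER, city TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        ("Ana", 34, "Lisbon"),
        ("Ben", 28, "Berlin"),
        ("Chloe", 41, "Lisbon"),
        ("Dev", 52, "Berlin"),
        ("Eli", 37, "Lisbon"),
        ("Fay", 45, "Madrid"),
        ("Gus", 39, "Berlin"),
    ],
)

query = """
    SELECT city, COUNT(*) AS customer_count   -- columns to return
    FROM customers
    WHERE age > 30                            -- filter rows before grouping
    GROUP BY city                             -- one output row per city
    HAVING COUNT(*) >= 2                      -- filter groups after aggregation
    ORDER BY customer_count DESC              -- sort the final result
"""
result = conn.execute(query).fetchall()
print(result)  # [('Lisbon', 3), ('Berlin', 2)]
```

Ben is excluded by WHERE before any counting happens, and Madrid is dropped by HAVING because only one of its customers remains after filtering.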

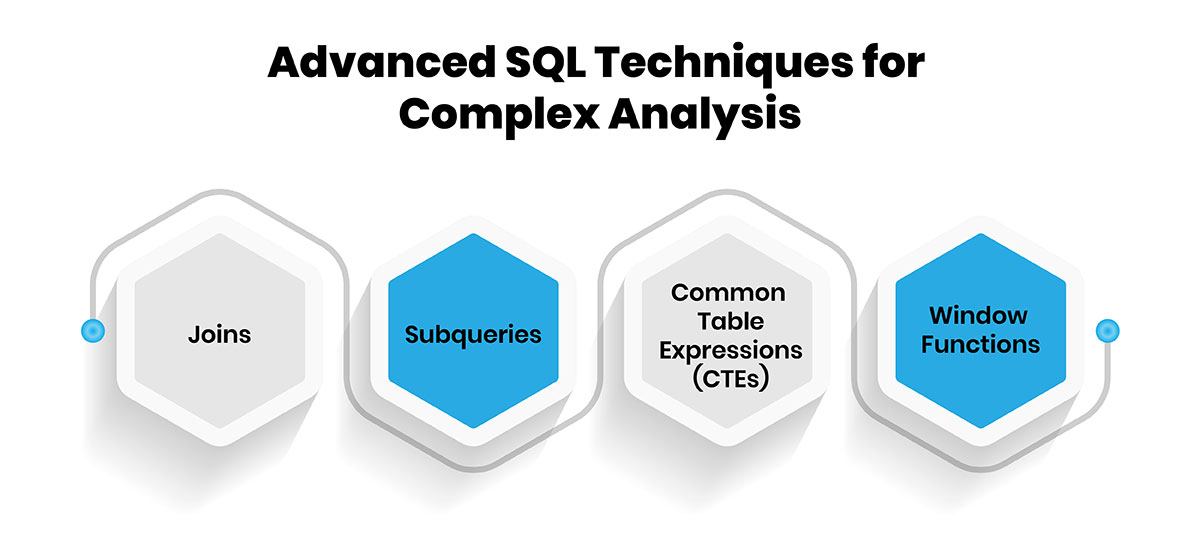

Advanced SQL Techniques for Complex Analysis

When dealing with massive datasets, simple SQL commands are not sufficient for complicated tasks. Advanced SQL techniques let you combine data from two or more tables, improve query speed, and keep complex queries manageable. The techniques below will help you improve your analytical skills:

1. JOINS:

- INNER JOIN: Returns only the rows that have matching values in both tables.

- LEFT JOIN: Returns all rows from the left table and the matching rows from the right table; where there is no match, the right table's columns are NULL.

- RIGHT JOIN: Returns all rows from the right table and the matching rows from the left table; where there is no match, the left table's columns are NULL.

- FULL OUTER JOIN: Returns all rows from both tables, matching them where possible and filling in NULLs on whichever side has no match.

Code Snippet:

SELECT a.column1, b.column2
FROM table_a a
LEFT JOIN table_b b
ON a.id = b.id;
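The difference between INNER JOIN and LEFT JOIN is easiest to see on concrete rows. The sketch below assumes Python's built-in sqlite3 module and two invented tables, `customers` and `orders`, where one customer (Ben) has no orders. RIGHT and FULL OUTER JOIN are left out because older SQLite versions do not support them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Chloe');
    INSERT INTO orders VALUES (10, 1, 50.0), (11, 1, 20.0), (12, 3, 75.0);
""")

# INNER JOIN keeps only customers that have at least one matching order.
inner_rows = conn.execute("""
    SELECT c.name, o.amount
    FROM customers c
    INNER JOIN orders o ON o.customer_id = c.id
    ORDER BY c.id, o.id
""").fetchall()

# LEFT JOIN keeps every customer; Ben has no orders, so his amount is NULL (None).
left_rows = conn.execute("""
    SELECT c.name, o.amount
    FROM customers c
    LEFT JOIN orders o ON o.customer_id = c.id
    ORDER BY c.id, o.id
""").fetchall()

print(inner_rows)  # [('Ana', 50.0), ('Ana', 20.0), ('Chloe', 75.0)]
print(left_rows)   # [('Ana', 50.0), ('Ana', 20.0), ('Ben', None), ('Chloe', 75.0)]
```

Note that Ana appears twice in both results: a join produces one output row per matching pair, not one per customer.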

2. Subqueries:

Subqueries, or inner queries, are a means of embedding one or more queries into another query to conduct more complex analyses. They are especially helpful when executing operations such as data filtering based on the result of another query.

Code Snippet:

SELECT name, salary
FROM employees
WHERE salary > (SELECT AVG(salary) FROM employees);

3. Common Table Expressions (CTEs):

CTEs are also useful in simplifying complex queries by dividing them into several parts which are easier to comprehend. They are especially useful when the same subquery has to be used several times in the given statement.

Code Snippet:

WITH EmployeeCTE AS (
    SELECT department, COUNT(*) AS dept_count
    FROM employees
    GROUP BY department
)
SELECT * FROM EmployeeCTE WHERE dept_count > 10;
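The point about reusing the same subquery can be illustrated with a CTE that is referenced twice in one statement. This is a sketch under stated assumptions: it uses Python's built-in sqlite3 module, and the `employees` rows and the `dept_avg` name are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, department TEXT, salary REAL)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [
        ("Ana", "Sales", 50000),
        ("Ben", "Sales", 70000),
        ("Chloe", "Engineering", 90000),
        ("Dev", "Engineering", 110000),
        ("Eli", "Support", 40000),
    ],
)

# dept_avg is defined once and used twice: as the main source of rows and
# inside the subquery that computes the average across departments.
query = """
    WITH dept_avg AS (
        SELECT department, AVG(salary) AS avg_salary
        FROM employees
        GROUP BY department
    )
    SELECT department, avg_salary
    FROM dept_avg
    WHERE avg_salary > (SELECT AVG(avg_salary) FROM dept_avg)
    ORDER BY department
"""
result = conn.execute(query).fetchall()
print(result)  # [('Engineering', 100000.0)]
```

Without the CTE, the grouped subquery would have to be written out in full in both places.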

4. Window Functions:

Window functions such as ROW_NUMBER(), RANK(), and LAG() perform calculations across a set of rows related to the current row. Unlike GROUP BY, they do not collapse rows, so you can compute rankings, running totals, and row-to-row comparisons while keeping every row of the result.

Code Snippet:

SELECT employee_name, salary,
    RANK() OVER (ORDER BY salary DESC) AS salary_rank
FROM employees;
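The three functions named above behave differently when values tie, which a small runnable comparison makes visible. The sketch assumes Python's built-in sqlite3 module (window functions need SQLite 3.25 or newer) and invented `employees` rows in which two people share the top salary.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (employee_name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Ana", 90000), ("Ben", 70000), ("Chloe", 90000), ("Dev", 50000)],
)

query = """
    SELECT
        employee_name,
        salary,
        -- ROW_NUMBER gives every row a distinct number, even for ties.
        ROW_NUMBER() OVER (ORDER BY salary DESC, employee_name) AS row_num,
        -- RANK gives tied rows the same rank and then skips ahead.
        RANK()       OVER (ORDER BY salary DESC) AS salary_rank,
        -- LAG reads the salary from the previous row in the window order.
        LAG(salary)  OVER (ORDER BY salary DESC, employee_name) AS prev_salary
    FROM employees
    ORDER BY salary DESC, employee_name
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

All four employees remain in the output. Ana and Chloe both receive rank 1, Ben receives rank 3, and the first row has no previous salary, so LAG returns NULL there.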

These new techniques allow analysts to execute more sophisticated and extensive queries, thus making SQL techniques for data analysis adaptable and comprehensive for various data settings.

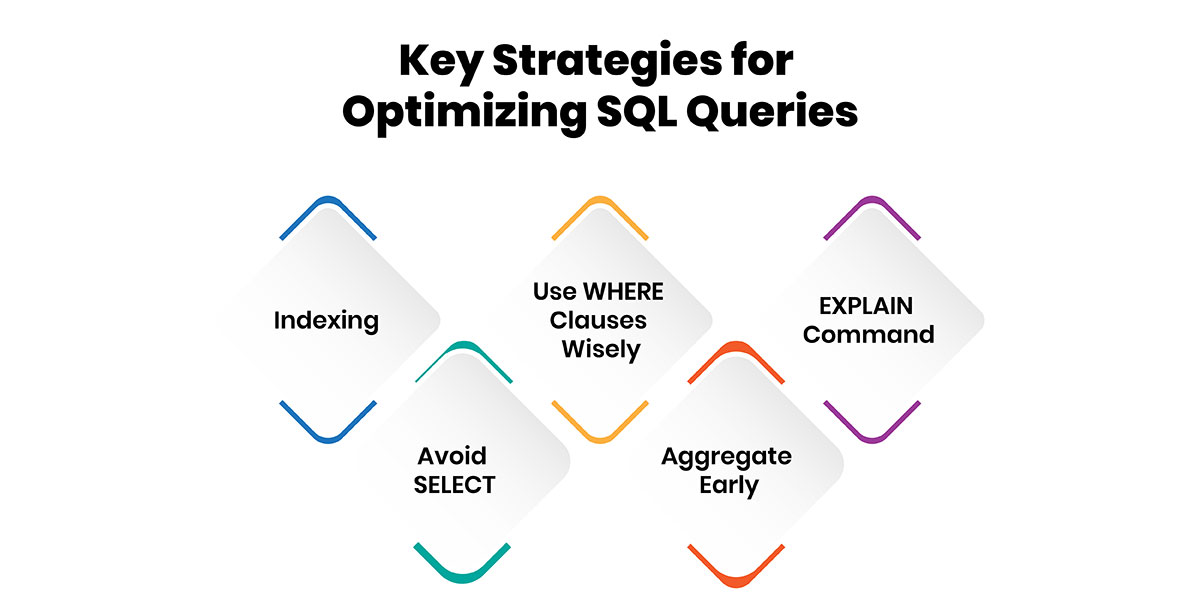

Optimizing SQL Queries for Large Datasets

When you are working with large datasets, query optimization is essential. Unoptimized queries take longer to compute, consume more resources, and can deliver results too late to be useful.

Here are key strategies for optimizing your SQL queries:

- Indexing: Create indexes on columns often used in WHERE clauses, JOIN conditions and ORDER BY statements. Indexes can cut down the search time dramatically.

- Avoid SELECT *: Instead of selecting all columns, select only the columns you need. This reduces data transfer and improves query performance.

- Use WHERE Clauses Wisely: Filtering your data as early as possible reduces the amount of data that needs to be processed.

- Aggregate Early: When working with GROUP BY, aggregate as early as possible in the query (for example, before joining to other tables) to minimize the amount of data that later steps must process.

- EXPLAIN Command: In MySQL and PostgreSQL, put EXPLAIN in front of your SELECT query to see how the SQL engine plans to execute it. The plan helps you locate performance bottlenecks such as full table scans.
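The effect of an index on a query plan can be checked directly. The sketch below assumes Python's built-in sqlite3 module, whose counterpart to EXPLAIN is `EXPLAIN QUERY PLAN`; the `customers` table, the generated rows, the `query_plan` helper, and the index name `idx_customers_city` are all invented for the example. The exact plan wording differs between engines and versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT)")
conn.executemany(
    "INSERT INTO customers (name, city) VALUES (?, ?)",
    [(f"customer_{i}", f"city_{i % 100}") for i in range(10_000)],
)

def query_plan(sql):
    """Return the human-readable plan steps SQLite reports for a statement."""
    # Each plan row is (id, parent, unused, detail); the detail text is what we read.
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

# Select only the needed column and filter on the column we are about to index.
sql = "SELECT name FROM customers WHERE city = 'city_42'"

plan_before = query_plan(sql)   # full table scan: every row is examined
conn.execute("CREATE INDEX idx_customers_city ON customers (city)")
plan_after = query_plan(sql)    # index search: only matching rows are visited

print(plan_before)
print(plan_after)
```

Before the index exists the plan reports a scan of the whole table; afterwards it reports a search that uses `idx_customers_city`. Indexes also add overhead to writes, so they are best reserved for columns that are filtered, joined, or sorted on frequently.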

Through these techniques you can make your SQL for data analytics more efficient, so analyses will run smoothly and fast even for huge datasets.

Working with Time Series Data in SQL

Time series data is essential in most companies because it helps businesses understand trends, seasonality, and durations. SQL is especially well suited to time-based data, since its date functions, aggregations, and window functions cover most of the computations analysts need.

To effectively work with time series data in SQL, consider the following techniques:

- Date Functions: Functions such as DATEPART, DATEDIFF, and DATEADD are commonly used to manipulate and analyze date fields (these are SQL Server names; other systems provide equivalents, for example EXTRACT and DATE_TRUNC in PostgreSQL or DATE_ADD in MySQL). They help you break data into time segments such as day, month, or year.

- Aggregating Data: Group time series data into intervals so that you can analyze metrics per period. For instance, you can summarize daily sales into monthly totals using the GROUP BY clause (DATE_TRUNC below is PostgreSQL syntax):

Code Snippet:

SELECT
    DATE_TRUNC('month', order_date) AS month,
    SUM(sales_amount) AS total_sales
FROM
    sales
GROUP BY
    month
ORDER BY
    month;

- Rolling Averages: Use moving averages to smooth out short-term fluctuations in the series, which makes trends easier to see. For example, a 7-day moving average can be computed using window functions (this assumes one row per day, since the frame counts rows rather than dates):

Code Snippet:

SELECT
    order_date,
    AVG(sales_amount) OVER (
        ORDER BY order_date
        ROWS BETWEEN 6 PRECEDING AND CURRENT ROW
    ) AS moving_average
FROM
    sales;
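Both time series techniques can be tried on a handful of rows. This is a sketch under stated assumptions: it uses Python's built-in sqlite3 module, where `strftime('%Y-%m', ...)` plays the role of a month truncation, and it shortens the window to three rows so the arithmetic is easy to check by hand. The `sales` rows are invented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (order_date TEXT, sales_amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [
        ("2024-01-30", 100.0),
        ("2024-01-31", 200.0),
        ("2024-02-01", 300.0),
        ("2024-02-02", 400.0),
    ],
)

# Monthly totals: reduce each date to its year-month and sum per group.
monthly = conn.execute("""
    SELECT strftime('%Y-%m', order_date) AS month,
           SUM(sales_amount) AS total_sales
    FROM sales
    GROUP BY month
    ORDER BY month
""").fetchall()

# 3-row moving average: the current row plus the two rows before it.
moving = conn.execute("""
    SELECT order_date,
           AVG(sales_amount) OVER (
               ORDER BY order_date
               ROWS BETWEEN 2 PRECEDING AND CURRENT ROW
           ) AS moving_average
    FROM sales
    ORDER BY order_date
""").fetchall()

print(monthly)  # [('2024-01', 300.0), ('2024-02', 700.0)]
print(moving)
```

The first rows of a moving average are computed over fewer rows than the window size (here 100.0, then 150.0), because there are not yet enough preceding rows to fill the frame. Keep that in mind when reading the start of a smoothed series.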

Upon applying these techniques in time series analysis using SQL, analysts can obtain the insights they need to make the right decisions at the right time based on temporal patterns.

Data Visualization with SQL and Integration Tools

Visualization is a critical aspect of data analysis because it lets analysts communicate findings and conclusions with minimal confusion. SQL itself is only a data query and manipulation language, not a charting tool, but it plays a critical role in shaping data for visualization. By querying with SQL, you can surface patterns and trends in a dataset before sending it to a data visualization application.

To effectively visualize data using SQL, consider the following steps:

- Prepare Data: Use SQL queries to compute averages, minimums, and maximums, and to group or subset the data. For instance, summarizing sales by region or product type gives a good foundation for charts.

- Exporting Results: Once your data is cleaned and summarized, export the results to a tool such as Tableau or Power BI. Most such tools can also connect directly to a SQL database, so a separate export step is often unnecessary.

- Leverage Built-in Functions: Several SQL features make visualizations more straightforward. For example, a CASE expression lets you assign rows to categories according to criteria you define, which gives charts more meaningful groupings.

- Dynamic Queries: Parameterized SQL queries, whose results change with user input, support interactive dashboards that let decision-makers view the information in various ways.
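The last two points, CASE-based categories and queries driven by user input, can be combined in one short sketch. It assumes Python's built-in sqlite3 module; the `sales_data` rows, the size thresholds, and the `orders_by_size` helper are invented for illustration. The region is passed as a bound parameter, which is the safe way to feed user input into a query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales_data (order_id INTEGER, region TEXT, sales REAL)")
conn.executemany(
    "INSERT INTO sales_data VALUES (?, ?, ?)",
    [
        (1, "North", 1200.0),
        (2, "North", 300.0),
        (3, "South", 80.0),
        (4, "South", 650.0),
    ],
)

def orders_by_size(region):
    """Count orders per size bucket for one region (the dashboard's filter value)."""
    query = """
        SELECT
            CASE
                WHEN sales >= 1000 THEN 'large'
                WHEN sales >= 500  THEN 'medium'
                ELSE 'small'
            END AS order_size,
            COUNT(*) AS total_orders
        FROM sales_data
        WHERE region = ?          -- bound parameter, never string concatenation
        GROUP BY order_size
        ORDER BY order_size
    """
    return conn.execute(query, (region,)).fetchall()

north = orders_by_size("North")
south = orders_by_size("South")
print(north)  # [('large', 1), ('small', 1)]
print(south)  # [('medium', 1), ('small', 1)]
```

A dashboard would call a function like this each time the user picks a different region, and chart the returned buckets.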

An example SQL query for preparing sales data might look like this:

Code Snippet:

SELECT
    region,
    SUM(sales) AS total_sales,
    COUNT(order_id) AS total_orders
FROM
    sales_data
GROUP BY
    region
ORDER BY
    total_sales DESC;

When your data has been prepared well in SQL, building the visualization becomes easier and the message for decision-makers comes through clearly.

SQL for Predictive Analytics & Data Modeling

Using SQL in predictive modelling helps you understand patterns in historical data. Data preparation is of paramount importance when developing accurate predictive models, and SQL can do much of that work. With SQL, analysts can clean, reshape, and format their data so that it is ready for modelling.

Key steps in using SQL for predictive analytics include:

- Data Cleaning: SQL lets you find NULL values, duplicate records, and even outliers, which makes it an important tool for data quality assurance.

- Feature Engineering: Transforming the available data lets analysts create new variables, or features, for a model. Examples include deriving age from a birthdate or computing total sales from transaction data.

- Aggregating Data: Functions such as SUM(), AVG(), and COUNT() aggregate data, which is handy when a model predicts trends from summarized values.

- Integration with Analytical Tools: Python and R integrate well with SQL. Users can pull datasets from SQL databases into these environments and apply machine learning there to make predictions.
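The steps above can be sketched end to end: clean the data in SQL, derive per-customer features, and hand the result to Python. This is a minimal illustration, assuming Python's built-in sqlite3 module and an invented `transactions` table. It derives a birth year rather than an age so that the output does not depend on today's date.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (customer_id INTEGER, birthdate TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?)",
    [
        (1, "1990-05-01", 100.0),
        (1, "1990-05-01", 100.0),   # exact duplicate row
        (1, "1990-05-01", 50.0),
        (2, "1985-11-20", None),    # missing amount
        (2, "1985-11-20", 200.0),
    ],
)

# Data cleaning check: how many rows are missing an amount?
null_amounts = conn.execute(
    "SELECT COUNT(*) FROM transactions WHERE amount IS NULL"
).fetchone()[0]

# Clean (drop NULLs and exact duplicates), then engineer one feature row per
# customer. DISTINCT is a simplification: real data would need a transaction id
# to tell true duplicates from legitimate repeat purchases.
features = conn.execute("""
    WITH cleaned AS (
        SELECT DISTINCT customer_id, birthdate, amount
        FROM transactions
        WHERE amount IS NOT NULL
    )
    SELECT
        customer_id,
        CAST(strftime('%Y', birthdate) AS INTEGER) AS birth_year,
        SUM(amount) AS total_sales,
        COUNT(*) AS purchase_count
    FROM cleaned
    GROUP BY customer_id, birthdate
    ORDER BY customer_id
""").fetchall()

print(null_amounts)  # 1
print(features)      # [(1, 1990, 150.0, 2), (2, 1985, 200.0, 1)]
```

The `features` list is an ordinary Python structure at this point, so it can be passed on to whichever modelling library you use.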

Taken together, these techniques make SQL both a practical tool for data preparation and a foundation for building accurate predictive models, which underlines its relevance to data analytics.

Conclusion

Learning SQL is essential for anyone who wants to work effectively in data analysis. Because it is so capable at querying, manipulating, and combining data, it forms the basis of sound data-driven decision-making. As industries rely on ever larger volumes of data, SQL's importance will continue to grow. Developing your SQL skills further is therefore one of the best ways to prepare for the challenges of tomorrow.