Data transformation techniques are essential in data analysis because they turn raw data, often in large volumes, into a structured form that an organization can use. Different transformation methods clean, standardize, and prepare data so that analysts can draw valuable conclusions from it. The right transformation can also improve a model's accuracy and its robustness in real-world use. In this article, we discuss different data transformation techniques and how they enhance data analysis.

Core Principles and Stages of Data Transformation

Data transformation is vital for cleaning raw data and making the most of it. It converts data from its original form into one that fits the analytical tools in use and improves the quality of the resulting insights. This allows business professionals and researchers to make better-informed decisions based on relevant data.

Stages of Data Transformation

The process typically follows these key stages:

- Extraction: Data is collected from its sources, such as databases, sensors, and logs, in the form in which it was generated.

- Cleaning: Identifying and correcting errors, inconsistencies, or duplicates in the data.

- Transformation: Converting and reshaping data, including reformatting, normalizing, or encoding.

- Loading: Writing the transformed data into a target system, such as a data warehouse, for subsequent analysis or visualization.
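The four stages can be sketched as a small pandas pipeline. This is a minimal illustration under stated assumptions, not a production design: the inline CSV text stands in for a real source, the column names (`order_id`, `region`, `amount`) and the helper functions are hypothetical, and an in-memory SQLite database stands in for the target system.

```python
import io
import sqlite3

import pandas as pd

# Hypothetical raw export: inconsistent casing, a duplicate row, and a missing amount
RAW_CSV = """order_id,region,amount
1,North,120.5
2,south,80.0
2,south,80.0
3,NORTH,
4,East,200.0
"""


def extract(text: str) -> pd.DataFrame:
    """Extraction: read the data in the form in which it was produced."""
    return pd.read_csv(io.StringIO(text))


def clean(df: pd.DataFrame) -> pd.DataFrame:
    """Cleaning: remove duplicate rows and rows with no amount."""
    return df.drop_duplicates().dropna(subset=["amount"]).reset_index(drop=True)


def transform(df: pd.DataFrame) -> pd.DataFrame:
    """Transformation: standardize text casing and add a min-max normalized column."""
    out = df.copy()
    out["region"] = out["region"].str.title()
    amount = out["amount"]
    out["amount_scaled"] = (amount - amount.min()) / (amount.max() - amount.min())
    return out


def load(df: pd.DataFrame, conn: sqlite3.Connection) -> None:
    """Loading: write the result to a table in the target system."""
    df.to_sql("orders", conn, index=False, if_exists="replace")


conn = sqlite3.connect(":memory:")
load(transform(clean(extract(RAW_CSV))), conn)
print(pd.read_sql("SELECT * FROM orders ORDER BY order_id", conn))
```

Keeping each stage in its own function makes it easy to test or replace one stage (for example, swapping the SQLite target for a warehouse connection) without touching the others.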

Role in Data Analysis

Proper transformation ensures that datasets are:

- Consistent: Values of each variable share uniform formats, units, and types.

- Accurate: Free of errors and duplicate records, which increases the reliability of the analysis.

- Readable: Structured so that it can be incorporated into analytical models efficiently, reducing the time needed for analysis.

Data transformation also ensures that the data are compatible with the chosen modeling techniques and serve the ultimate goal of the analysis. Because these steps turn raw data into usable insight, transformation is an integral part of data preparation.

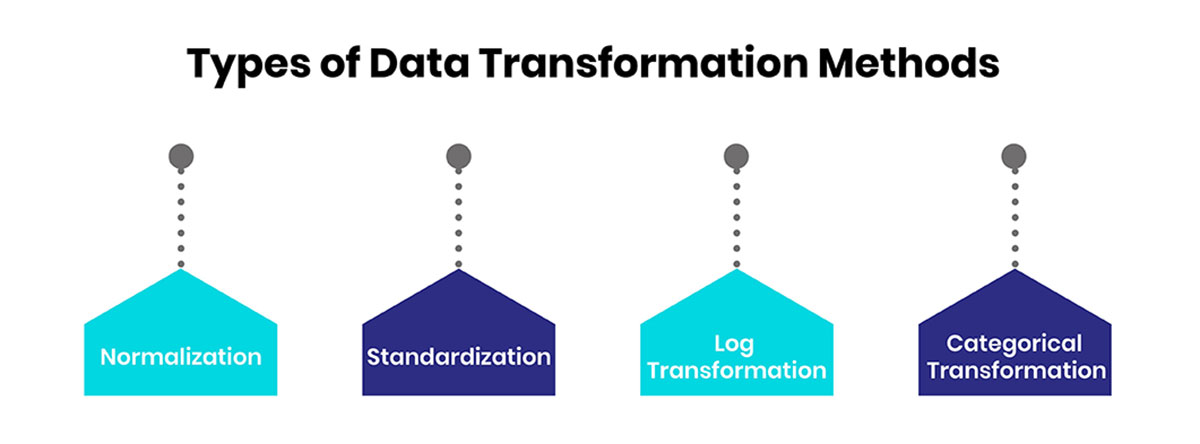

Types of Data Transformation Methods

Data transformation organizes large amounts of raw data so that it is easier to process in later steps. Different methods address different problems in a dataset, such as numbers on incompatible scales or categories that need encoding. Here are some key transformation methods:


Normalization

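Normalization (min-max scaling) rescales numerical values into the range 0 to 1 so that variables measured on different scales can be compared. It matters most for distance-based methods such as clustering, where a variable with a large range would otherwise dominate the distance calculation.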

Example: Transforming a dataset of ages from a 0-100 scale to a 0-1 scale for uniformity.
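Min-max normalization computes (x - min) / (max - min) for each value. A minimal NumPy sketch of that formula; the helper name `min_max_normalize` and the sample ages are illustrative assumptions, not part of the original example:

```python
import numpy as np


def min_max_normalize(values: np.ndarray) -> np.ndarray:
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    values = np.asarray(values, dtype=float)
    value_range = values.max() - values.min()
    if value_range == 0:
        # All values are identical, so there is no spread to rescale
        return np.zeros_like(values)
    return (values - values.min()) / value_range


ages = np.array([0, 25, 50, 100])  # hypothetical ages on a 0-100 scale
print(min_max_normalize(ages))
```

Here the ages 0, 25, 50, and 100 map to 0, 0.25, 0.5, and 1. For tabular data, scikit-learn's `MinMaxScaler` applies the same formula column by column.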

Standardization

Standardization (z-score scaling) rescales each variable to a mean of 0 and a standard deviation of 1. It changes the scale of the data but not the shape of its distribution, which benefits algorithms that are sensitive to the scale of their inputs.

Example: Scaling the data before conducting regression analysis.
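A short sketch using scikit-learn's `StandardScaler`, which subtracts each column's mean and divides by its standard deviation. The two columns (income and age) are hypothetical values chosen only to show variables on very different scales:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical features on very different scales: income (dollars) and age (years)
X = np.array([
    [48_000, 25],
    [52_000, 32],
    [61_000, 47],
    [75_000, 51],
])

scaler = StandardScaler()
X_scaled = scaler.fit_transform(X)  # each column now has mean 0 and standard deviation 1

print(X_scaled.mean(axis=0).round(6))
print(X_scaled.std(axis=0).round(6))
```

When a model is trained and evaluated on separate data, the usual practice is to fit the scaler on the training set only and apply `scaler.transform` to the test set, so that no information from the test data leaks into training.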

Log Transformation

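A log transformation reduces right (positive) skewness and stabilizes variance in data that grow exponentially or span several orders of magnitude. It is mainly applied when the distribution is long-tailed, with a few extreme values stretching out the right side of the histogram. Because the logarithm is defined only for positive numbers, log(1 + x) is a common variant for data that contain zeros.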

Example: Applying a log transformation to sales data to reduce the influence of a few very large values.
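A minimal sketch of the sales example, assuming non-negative sales figures (the values below are made up to produce a long right tail). It uses `np.log1p`, which computes log(1 + x), and compares skewness before and after:

```python
import numpy as np
import pandas as pd

# Hypothetical daily sales with a few very large values (a long right tail)
sales = pd.Series([120, 150, 180, 200, 250, 300, 5_000, 20_000])

# log1p computes log(1 + x), so zero sales stay defined (log1p(0) == 0)
log_sales = np.log1p(sales)

print("Skewness before:", round(sales.skew(), 2))
print("Skewness after: ", round(log_sales.skew(), 2))

# np.expm1 reverses the transformation when results must be reported in original units
restored = np.expm1(log_sales)
```

The transformed series is still somewhat skewed, but far less so; the two extreme days no longer dominate the scale.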

Categorical Transformation

Converts categorical data into numerical formats to enable effective analysis and compatibility with mathematical models.

- One-Hot Encoding: Converts each category into its own binary column, so no ordering between categories is implied.

- Label Encoding: Assigns each category a unique integer, but can introduce an artificial order that a model may treat as meaningful.
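Both encodings can be sketched with pandas alone. The `color` column is a hypothetical nominal feature; `pd.get_dummies` produces the one-hot columns, and the categorical dtype's `cat.codes` gives integer labels:

```python
import pandas as pd

# Hypothetical nominal feature with no natural order
df = pd.DataFrame({"color": ["red", "green", "blue", "green"]})

# One-hot encoding: one 0/1 column per category, so no order is implied
one_hot = pd.get_dummies(df, columns=["color"], dtype=int)

# Label encoding: one integer per category (categories are sorted, so blue=0, green=1, red=2)
df["color_label"] = df["color"].astype("category").cat.codes

print(one_hot)
print(df)
```

The integer codes suggest blue < green < red, an ordering that a linear model could treat as meaningful even though the colors have none; one-hot encoding avoids this at the cost of one column per category.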

Techniques for Handling Missing Data

Missing data is a common problem in data analysis, and incomplete datasets can seriously mislead or bias an analysis. There are several ways to deal with missing values and keep the analysis sound. Below are some widely used techniques:


Imputation: Replacing missing values with estimates based on the other values in the dataset. The most common strategies use the attribute's mean, median, or mode.

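Code Snippet (Imputation with Mean):

```python
import numpy as np
from sklearn.impute import SimpleImputer

data = np.array([[1.0, 2.0], [np.nan, 3.0], [7.0, np.nan]])  # sample data with gaps
imputer = SimpleImputer(strategy='mean')  # replace each NaN with its column's mean
transformed_data = imputer.fit_transform(data)
```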

Interpolation: Estimating missing values from the neighboring values in the dataset. This method works well for time series and other data with a natural order.

Code Snippet (Linear Interpolation):

```python
# data is a pandas DataFrame or Series; each gap is filled linearly from its neighbors
data = data.interpolate(method='linear')
```


Deletion: If missing values are few and scattered, so that removing them does not skew the results, you can delete the rows or columns that contain them. Use this method with care, since it discards data.

Code Snippet (Dropping Missing Data):

```python
# Drop rows with missing values
data_cleaned = data.dropna()

# Drop columns with missing values
data_cleaned = data.dropna(axis=1)
```

Using Models for Imputation: Machine learning models can predict missing values from the available data. They learn patterns from the complete entries and use them to estimate the missing ones, which makes them especially effective when variables are strongly related to one another.

Code Snippet (KNN Imputation):

```python
from sklearn.impute import KNNImputer

# Fill each missing value using the 5 most similar rows (nearest neighbors)
imputer = KNNImputer(n_neighbors=5)
transformed_data = imputer.fit_transform(data)
```

Each method has its own advantages and disadvantages, and the right choice depends on the type of data and the requirements of the analysis. Handling missing data well keeps results sound and preserves the quality of the analysis.
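How much the choice matters is easy to see on a small series. In this sketch the readings are made up and follow a steady upward trend: mean imputation fills both gaps with the overall mean, interpolation recovers the trend, and deletion simply shrinks the data.

```python
import numpy as np
import pandas as pd

# Hypothetical daily readings with two gaps
readings = pd.Series([10.0, np.nan, 30.0, np.nan, 50.0])

mean_filled = readings.fillna(readings.mean())        # both gaps become 30.0
interpolated = readings.interpolate(method="linear")  # gaps become 20.0 and 40.0
dropped = readings.dropna()                           # only the 3 observed rows remain

print(pd.DataFrame({
    "original": readings,
    "mean": mean_filled,
    "interpolated": interpolated,
}))
print("Rows after deletion:", len(dropped))
```

For ordered data with a trend, interpolation is usually the better fit; for unordered records, mean, median, or model-based imputation is the more natural choice.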

Dimensionality Reduction and Its Role

Dimensionality reduction is a key data transformation approach in data analysis. It simplifies high-dimensional data while retaining most of its important information. It also speeds up computation, reduces storage requirements, and helps prevent overfitting in machine learning models.

Key Dimensionality Reduction Methods:


Principal Component Analysis (PCA):

PCA projects the data onto a small number of new axes, the principal components, chosen to capture as much of the data's variance as possible. This reduces dimensionality with minimal loss of essential information.

Code Example (PCA):

```python
import numpy as np
from sklearn.decomposition import PCA

# Sample dataset: 100 rows, 10 features
data = np.random.rand(100, 10)

# Apply PCA, keeping 3 components
pca = PCA(n_components=3)
reduced_data = pca.fit_transform(data)

print("Reduced Data Shape:", reduced_data.shape)  # (100, 3)
```

Linear Discriminant Analysis (LDA):

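LDA is a supervised method that uses class labels to project data onto a lower-dimensional space, choosing directions that maximize the separation between classes relative to the spread within each class. This makes it well suited to classification problems.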
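A minimal LDA sketch with scikit-learn, using the Iris dataset purely as an illustrative example. Unlike PCA, LDA needs the class labels, and it can produce at most (number of classes - 1) components:

```python
from sklearn.datasets import load_iris
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

X, y = load_iris(return_X_y=True)  # 150 samples, 4 features, 3 classes

# LDA is supervised: it uses the class labels y to find the projection.
# With 3 classes, at most 3 - 1 = 2 discriminant components exist.
lda = LinearDiscriminantAnalysis(n_components=2)
X_lda = lda.fit_transform(X, y)

print("Reduced Data Shape:", X_lda.shape)  # (150, 2)
```

Asking for more than two components here raises an error, because the number of classes, not the number of features, limits the LDA projection.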

Benefits of Dimensionality Reduction:

- Can improve model accuracy by removing noise and irrelevant features.

- Reduces storage and processing requirements.

- Mitigates risks of multicollinearity in the dataset.

For example, PCA can reduce the four features of the Iris dataset to two:

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

# Load sample dataset
data = load_iris()
df = pd.DataFrame(data.data, columns=data.feature_names)

# Apply PCA to reduce dimensions to 2
pca = PCA(n_components=2)
reduced_data = pca.fit_transform(df)
print("Reduced Data:\n", reduced_data)
```
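Instead of fixing the number of components in advance, scikit-learn's `PCA` also accepts a fraction between 0 and 1 as `n_components` (with the `"full"` solver), keeping as many components as needed to explain at least that share of the variance. A sketch on Iris; the 95% threshold is an illustrative choice:

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

X = load_iris().data

# A float between 0 and 1 asks PCA to keep as many components as needed
# to explain at least that fraction of the total variance
pca = PCA(n_components=0.95, svd_solver="full")
X_reduced = pca.fit_transform(X)

print("Components kept:", pca.n_components_)
print("Variance explained per component:", pca.explained_variance_ratio_.round(3))
print("Total variance explained:", pca.explained_variance_ratio_.sum().round(3))
```

On the unscaled Iris data this keeps two components. Because PCA is driven by variance, features with large ranges dominate; standardizing the features first is a common step when they are measured in different units.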

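Outlier Detection and Noise Reduction

Outliers are values that lie unusually far from the rest of the data, and they can distort statistics and model fits. Two common ways to detect them are the z-score and IQR methods.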

Z-Score Method: The z-score measures how many standard deviations a value lies from the mean. A value with a z-score above 3 or below -3 is usually considered an outlier.

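Code Snippet:

```python
# data is a pandas Series of numeric values
z_scores = (data - data.mean()) / data.std()
outliers = data[z_scores.abs() > 3]  # values more than 3 standard deviations from the mean
```

IQR (Interquartile Range) Method: The IQR is the distance between the first quartile (Q1) and the third quartile (Q3). Values below Q1 - 1.5 × IQR or above Q3 + 1.5 × IQR are treated as outliers.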
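The IQR rule flags values below Q1 - 1.5 × IQR or above Q3 + 1.5 × IQR. A minimal pandas sketch; the helper name `iqr_outliers` and the sample values are hypothetical. Note that pandas computes quantiles with linear interpolation by default, so the quartiles can differ slightly from other conventions.

```python
import pandas as pd


def iqr_outliers(values: pd.Series, k: float = 1.5) -> pd.Series:
    """Return the values lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1 = values.quantile(0.25)
    q3 = values.quantile(0.75)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return values[(values < lower) | (values > upper)]


data = pd.Series([1, 2, 3, 4, 5, 100])  # hypothetical measurements
print(iqr_outliers(data))  # flags only the value 100
```

Because quartiles are barely affected by extreme values, the IQR rule works well on small or skewed samples where a single outlier would inflate the standard deviation used by the z-score method.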

Noise, meaning random variation in the data that carries no useful information, can be reduced with smoothing methods. A common method for continuous data is the moving average, which replaces each value with the mean of a sliding window and so reduces fluctuations:

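Code Snippet:

```python
# Replace each value with the mean of a 3-value trailing window (the first 2 results are NaN)
data_smooth = data.rolling(window=3).mean()
```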
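A runnable sketch with made-up sensor readings, comparing pandas' default trailing window with a centered one (`center=True`), which averages each value with its neighbors on both sides:

```python
import pandas as pd

# Hypothetical noisy sensor readings
data = pd.Series([3.0, 5.0, 4.0, 8.0, 6.0, 7.0])

# Trailing window: each value is the mean of itself and the two previous values,
# so the first two results are NaN (not enough history yet)
data_smooth = data.rolling(window=3).mean()

# Centered window: each value is averaged with one neighbor on either side
data_smooth_centered = data.rolling(window=3, center=True).mean()

print(pd.DataFrame({"raw": data, "trailing": data_smooth, "centered": data_smooth_centered}))
```

A trailing window uses only past values, which suits real-time data; a centered window does not lag behind the signal but needs future values, so it fits offline analysis better.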

Feature Engineering and Its Impact

Feature engineering is the step in data transformation that improves the inputs used by analytical models. It bridges the gap between raw data and the features a model can actually use, leading to more accurate and effective analyses. Below are the key aspects of feature engineering:


Creating New Variables: Deriving new features from existing ones, for example by combining columns or building aggregations.

Example: Deriving a total_sales feature by summing several monthly sales columns.

Code Snippet:

```python
# Sum the monthly columns into a single derived feature
data['total_sales'] = data['january_sales'] + data['february_sales'] + data['march_sales']
```
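Aggregation-based features can be built with `groupby(...).transform(...)`, which computes a per-group statistic and broadcasts it back to every row. A sketch with hypothetical transaction data (`customer_id` and `amount` are illustrative column names):

```python
import pandas as pd

# Hypothetical transaction-level data
data = pd.DataFrame({
    "customer_id": ["A", "A", "B", "B", "B"],
    "amount": [20.0, 30.0, 5.0, 10.0, 15.0],
})

# Aggregation feature: each customer's total spend, repeated on every row
data["customer_total"] = data.groupby("customer_id")["amount"].transform("sum")

# Derived ratio: the share of the customer's total that this transaction represents
data["share_of_total"] = data["amount"] / data["customer_total"]

print(data)
```

Using `transform` rather than `agg` keeps the original row count, so the new columns can sit alongside the raw data without a separate merge.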


Feature Selection: Keeping only the attributes the analysis needs and discarding redundant or irrelevant ones.

Example: Selecting the top 3 features by their chi-squared score against a categorical target:

Code Snippet:

```python
from sklearn.feature_selection import SelectKBest, chi2

# X holds non-negative features (a chi2 requirement) and y holds the class labels
selected_features = SelectKBest(chi2, k=3).fit_transform(X, y)
```
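A self-contained version of the same feature-selection idea, using the Iris dataset as a stand-in for real data and `k=2` so the result is easy to inspect. `get_support(indices=True)` maps the kept columns back to their names:

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, chi2

iris = load_iris()
X, y = iris.data, iris.target  # all four measurements are non-negative, as chi2 requires

selector = SelectKBest(chi2, k=2)
X_selected = selector.fit_transform(X, y)

# Map the kept column indices back to feature names
kept = [iris.feature_names[i] for i in selector.get_support(indices=True)]
print("Kept features:", kept)
print("Chi-squared scores:", selector.scores_.round(2))
```

On Iris the two petal measurements score highest and are kept. For a continuous target, a regression-oriented scoring function such as `f_regression` is the more appropriate choice.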

Encoding Techniques: Converting nominal data into numerical form. Label encoding maps each category to an integer, while one-hot encoding creates a dummy column for each category that takes only the values 0 and 1.

Example: One-Hot Encoding for categorical data:

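Code Snippet:

```python
import pandas as pd

# Create one binary dummy column per category in 'category_column'
encoded_data = pd.get_dummies(data, columns=['category_column'])
```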

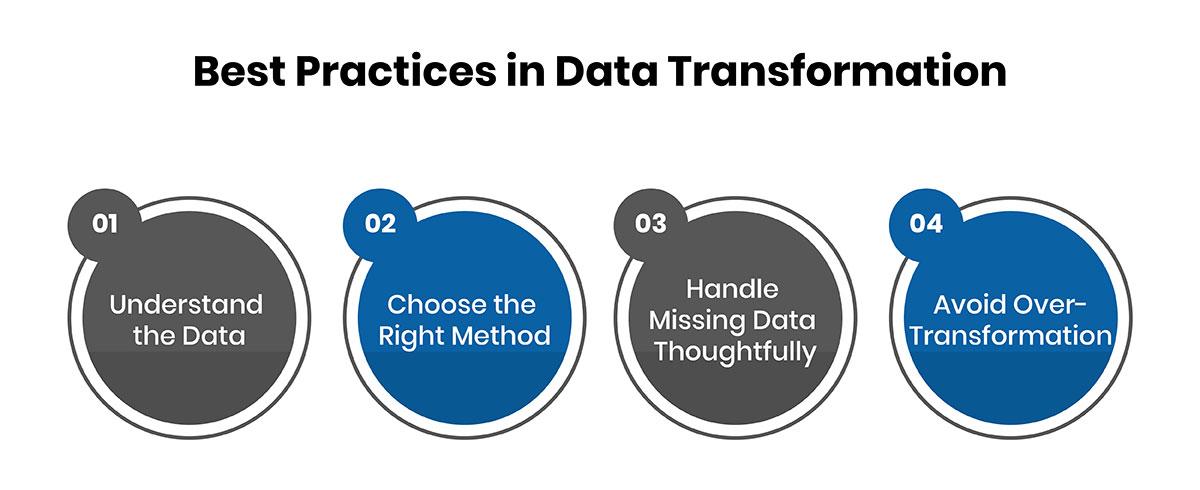

Best Practices in Data Transformation

Following best practices in data transformation produces more reliable and relevant information, and choosing appropriate transformation methods can significantly improve model performance. Below are key considerations to follow:

- Understand the Data: Examine the dataset's properties before applying any transformation. This includes identifying which variables are categorical and which are continuous, checking distributions for normality, and looking for missing data and outliers.

- Choose the Right Method: Choose each transformation based on the data type and the problem. For instance, normalization suits data whose variables have different ranges, while standardization is often preferred for data that are roughly normally distributed.

- Handle Missing Data Thoughtfully: Have a practical plan for missing values. The most suitable method depends on the type of data, with options ranging from simple statistical imputation to advanced predictive methods.

- Avoid Over-Transformation: Too many transformations can cause information loss. Check that the transformed data still reflect what the original data meant.
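For the "Understand the Data" step, a quick per-column profile can be produced with pandas before any transformation is chosen. A sketch with a hypothetical `profile` helper and made-up data: few distinct values hint at a categorical variable, the missing share guides the imputation choice, and strong skewness suggests a log transformation.

```python
import numpy as np
import pandas as pd


def profile(df: pd.DataFrame) -> pd.DataFrame:
    """Summarize each column: dtype, distinct values, share missing, and skewness."""
    summary = pd.DataFrame({
        "dtype": df.dtypes.astype(str),
        "n_unique": df.nunique(),
        "missing_share": df.isna().mean(),
    })
    # Skewness only makes sense for numeric columns; others are left as NaN
    summary["skewness"] = df.select_dtypes(include="number").skew()
    return summary


# Hypothetical dataset mixing numeric and categorical columns
df = pd.DataFrame({
    "age": [23, 35, np.nan, 41, 29],
    "income": [32_000, 45_000, 51_000, 250_000, 38_000],
    "segment": ["a", "b", "b", None, "a"],
})
print(profile(df))
```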

Conclusion

Data transformation techniques play a critical role in increasing the value of data by making it accurate and properly formatted for analysis. Processes such as normalization, handling of missing data, dimensionality reduction, and feature engineering improve data quality and the performance of analysts' models, and outlier handling and noise reduction refine the final dataset further. Together, these techniques enable organizations to obtain reliable information and to handle many types of data efficiently.