Data science and engineering increasingly overlap as data scientists work on production systems and join R&D teams. Git is a free and open-source distributed version control system that can help data scientists manage their projects effectively.

As a data scientist, understanding how to use Git and version control is crucial for collaborating with others and tracking changes to code, data, and models over time. This blog post covers Git fundamentals and best practices for applying them to all stages of your data science workflow.

What is Git?

Git is a distributed version control system (VCS) originally developed in 2005 by Linus Torvalds, the creator of the Linux kernel. As data volumes grow and data science projects become more complex, it gets harder to track changes, manage different versions of code and data, and collaborate with other team members. Git and the higher-level services built on top of it (like GitHub) offer tools to overcome this problem.

Usually, there is a single central repository (called "origin" or "remote"), which individual users clone to their local machine (called "local" or "clone"). Once users have saved meaningful work (called "commits"), they send it back ("push" and "merge") to the central repository.
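The round trip described above can be sketched in a few commands. This is a minimal illustration, not a recommended project layout: a local bare repository stands in for the central repository that would normally live on GitHub, and the directory names, file name, and demo identity are all placeholders.

```shell
set -e
workdir=$(mktemp -d)
cd "$workdir"

# A bare repository stands in for the central "origin" (normally hosted remotely).
git init -q --bare origin.git

# Each user clones the central repository to get a local copy.
# (Git warns that the repository is empty; that is expected here.)
git clone -q origin.git local
cd local
git config user.name "Demo User"          # placeholder identity for the demo
git config user.email "demo@example.com"

# Save meaningful work as a commit...
echo "print('hello')" > analysis.py
git add analysis.py
git commit -q -m "Add analysis script"

# ...and send it back to the central repository.
git push -q -u origin HEAD

# The central repository now holds the same commit as the local clone.
local_head=$(git rev-parse HEAD)
origin_head=$(git --git-dir="$workdir/origin.git" rev-parse HEAD)
echo "local:  $local_head"
echo "origin: $origin_head"
```

Pushing `HEAD` sends the current branch under its own name, so the sketch works whether your Git calls the default branch master or main.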

Some key aspects of Git:

- Distributed: Git allows distributed collaboration by storing project history on every contributor's machine, not just on a centralized server. This makes work and code sharing seamless across locations.

- Version control: Git helps track changes to files and coordinate work among collaborators by maintaining a history of changes. This enables easy rollback to previous versions.

- Local operations: Most Git operations can be performed locally, speeding up common development and code-review workflows.

- Open source: Git is open-source software distributed freely under the terms of the GNU General Public License version 2.

Git vs GitHub

While Git is the version control system, GitHub is a code hosting platform for version control and collaboration using Git. It offers additional tools and services on top of Git, such as:

- Web-based Git repository hosting for both public and private projects.

- Code review through the pull request process, where changes can be discussed before merging into primary branches.

- Issue tracking for bugs, tasks, and project planning.

- Wikis, plus automated builds and deployments via integrations with services like Travis CI and Heroku.

- Social coding features like following people and projects, team management, etc.

So, in summary, Git is the underlying distributed version control system, while GitHub (or alternative platforms like GitLab) provides hosting facilities and additional tools on top of Git repositories.

Git Terminology

It's important for data scientists to be familiar with commonly used Git terms:

- Repository: Stores metadata and references to all project files and their versions (commits). Think of it as your project folder.

- Branch: Independent line of development to isolate changes. The main or master branch contains the official release history.

- Commit: A snapshot (save point) that captures the state of your project at a certain point in time.

- Clone: A local copy of a repository (often of a remote repo on GitHub). Local commits are made in clones.

- Pull: Fetch updates from the remote and merge them into the local working copy.

- Push: Upload local commits to the remote repository.

- Merge: Joins two or more development branches together.

- Checkout: Switch between branches or restore tree contents from a specific commit.

- Fetch: Download objects/commits from remote without integrating into current branches.

- Rebase: Reapply local commits on top of an updated upstream branch.

Mastering these concepts early on will help data scientists become productive with Git.
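The difference between fetch and pull is the one that most often trips people up, so here is a small sketch of it. It assumes two hypothetical collaborators, alice and bob, sharing a local bare repository in place of a hosted remote; `commit_line` is a helper defined only for this example.

```shell
set -e
workdir=$(mktemp -d)
cd "$workdir"
git init -q --bare origin.git

# Helper (hypothetical): append a line to notes.txt and commit it in the current clone.
commit_line() {
    echo "$1" >> notes.txt
    git add notes.txt
    git -c user.name="Demo User" -c user.email="demo@example.com" commit -q -m "$1"
}

# Alice publishes the first commit.
git clone -q origin.git alice
(cd alice && commit_line "first result" && git push -q -u origin HEAD)

# Bob clones, then Alice publishes a second commit.
git clone -q origin.git bob
(cd alice && commit_line "second result" && git push -q)

cd bob
before=$(git rev-parse HEAD)

# fetch: downloads the new commit but leaves Bob's branch where it was.
git fetch -q origin
after_fetch=$(git rev-parse HEAD)
remote_tip=$(git rev-parse '@{upstream}')

# merge: integrates the fetched commit. "git pull" runs both steps in one go.
git merge -q --ff-only '@{upstream}'
after_merge=$(git rev-parse HEAD)
```

After the fetch, Bob's branch is unchanged and only the remote-tracking reference has moved; after the merge, both point at Alice's latest commit.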

Basic Git Commands for Data Scientists

Here are the most useful Git commands for data scientists:

- git init - Create a new repository on your local computer.

- git clone - Start working on an existing remote repository.

- git add - Choose file(s) to be saved (staging).

- git commit - Save the staged snapshot.

- git status - Check the status of files (staged, unstaged, untracked).

- git branch - List, create or delete branches.

- git checkout - Switch between branches or restore files.

- git merge - Merge two branches.

- git push - Push changes to the remote repository.

- git pull - Pull changes from the remote repository.

- git remote - Manage the set of repositories ("remotes") whose branches you track.

Getting Started with Git

To set up Git for a new project, follow these steps:

1. Initialize a Git Repository

Open a terminal or command prompt, navigate to your project directory, and run the following command to create a new Git repository:

git init

2. Check Status and Add Files

Next, check the status of your files and add them to the staging area:

git status

git add <file1> <file2>

Replace `<file1>` and `<file2>` with the actual file names you want to track. To add all files, you can use:

git add .

3. Commit Changes

Commit your changes with a meaningful message:

git commit -m "Initial commit with project files"

4. Link to a Remote Repository

To link your local repository to a remote one on GitHub, use the following commands. Replace `<repository-URL>` with the URL of your remote GitHub repository:

git remote add origin <repository-URL>

git push -u origin master

Setting Up Git for an Existing Non-Git Project

For an existing project that is not yet under Git version control, follow these steps:

1. Rename the Old Folder

Rename your existing project folder to something like `project_backup`.

2. Clone the Repository

Clone the repository from GitHub to your local machine:

git clone <repository-URL>

3. Copy Files Back

Copy the files from your `project_backup` folder back into the newly cloned repository folder.

4. Add and Commit Files

Navigate to your cloned repository, add the copied files, and commit them:

git add .

git commit -m "Initial commit with existing project files."

These core commands help set up Git for data science projects. Keep commits focused and messages descriptive for easy history tracking.

Git Workflow for Data Scientists

Now that the basics are covered, let's discuss а productive workflow:

- The main branch, typically called 'main' or 'master', should be used to host production-ready code that has been thoroughly tested and reviewed. This represents the single source of truth.

- For all new features or bug fixes, data scientists should branch off from the main branch by creating well-defined feature or topic branches with descriptive names such as 'feature-x' or 'fix-dataset-loading'.

- Changes and improvements should be made by working on these individual branches locally, with code commits capturing increments in work.

- Developers should regularly push their updated branches to the remote repository to safely back up their work in case their local machine encounters issues.

- Once stable, the feature or fix branches should be merged back into the main branch via pull requests, which propose the changes for approval and integration.

- Pull requests facilitate collaborative code reviews so others can weigh in, request amendments if needed, and ultimately sign off on the update.

- Exploratory work and experiments can leverage temporary topic branches that can be discarded once completed to avoid clutter.

- Commit changes in logical batches by selectively staging individual modified files rather than entire directories for improved traceability.

- Data scientists must frequently pull down the latest changes from the main branch and other contributors to resolve any merge conflicts as promptly as possible.

- Once a branch has been merged after a pull request, the local and remote branch references can be deleted since they have served their purpose.

Adopting this sort of branch-based workflow allows for effective collaboration while keeping the main codebase organized and integration seamless through pull requests and reviews. It provides flexibility along with accountability.
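A stripped-down version of this workflow might look like the sketch below. It runs entirely on one machine, so a local merge stands in for the pull request that would normally happen on GitHub; the repository name, file name, and demo identity are placeholders.

```shell
set -e
cd "$(mktemp -d)"
git init -q project && cd project
git config user.name "Demo User"          # placeholder identity for the demo
git config user.email "demo@example.com"

# Production-ready code lives on the main branch.
echo "def load(): pass" > load_data.py
git add load_data.py
git commit -q -m "Add data loading stub"
main_branch=$(git symbolic-ref --short HEAD)   # 'master' or 'main', depending on configuration

# Branch off for a focused piece of work and commit there.
git checkout -q -b fix-dataset-loading
echo "def load(): return []" > load_data.py
git add load_data.py
git commit -q -m "Return an empty list from load()"

# After review, merge into the main branch. On GitHub this happens via a
# pull request; a local merge stands in for it here.
git checkout -q "$main_branch"
git merge -q --no-ff -m "Merge fix-dataset-loading" fix-dataset-loading

# The branch has served its purpose and can be deleted.
git branch -q -d fix-dataset-loading
git log --oneline --graph
```

The `--no-ff` flag forces a merge commit so the branch remains visible in the history; whether you want that or a fast-forward is a team convention rather than a rule.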

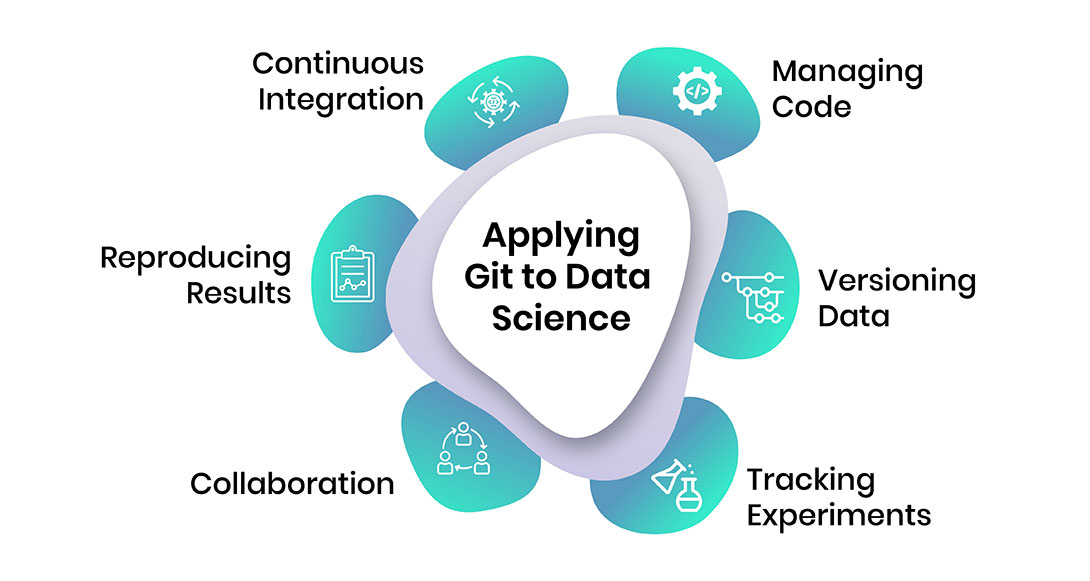

Applying Git to Data Science

Now let's explore incorporating Git best practices specifically into data science workflows:

Managing Code

Track data processing/cleaning scripts, feature engineering functions, model training code, deployment scripts, etc., in Git. This keeps experiments reproducible.

Versioning Data

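Store raw and processed datasets in Git only with careful consideration for size and privacy: large binary files bloat the repository history, and sensitive data should not be committed at all. Where the full data cannot be versioned, store metadata, schemas, and sample/test data instead.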
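One common way to act on the size and privacy concern is a `.gitignore` rule that keeps bulky or sensitive files out of the repository while small samples stay tracked. The sketch below assumes a hypothetical layout with `data/raw/` for full datasets and `data/sample/` for small samples; adapt the paths to your own project.

```shell
set -e
cd "$(mktemp -d)"
git init -q project && cd project
mkdir -p data/raw data/sample

# Keep everything under data/raw out of version control; data/sample stays tracked.
printf 'data/raw/\n' > .gitignore

echo "id,value" > data/raw/full_dump.csv      # stands in for a large or sensitive file
echo "id,value" > data/sample/sample.csv      # small sample that is safe to commit

# Stage everything; Git skips the ignored directory.
git add .
git status --short

# check-ignore confirms which rule excludes a given path.
git check-ignore -v data/raw/full_dump.csv
```

Note that `.gitignore` only affects files that are not yet tracked; a file that was committed earlier stays in the history even if a rule is added later.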

Tracking Experiments

Use branches to run different hyperparameter tuning experiments or A/B tests. Record hyperparameters, results, and models as commits for easy comparison.
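As a rough sketch of the branch-per-experiment idea, the example below keeps hyperparameters in a plain text file and gives each experiment its own branch. The file name `params.txt`, the branch names, and the learning-rate values are made up for illustration; no results are recorded here.

```shell
set -e
cd "$(mktemp -d)"
git init -q experiments && cd experiments
git config user.name "Demo User"          # placeholder identity for the demo
git config user.email "demo@example.com"

# Baseline configuration on the default branch.
echo "learning_rate=0.1" > params.txt
git add params.txt
git commit -q -m "Baseline hyperparameters"
base_branch=$(git symbolic-ref --short HEAD)

# One branch per experiment, each changing only what it tests.
git checkout -q -b exp-lr-0.01
echo "learning_rate=0.01" > params.txt
git commit -q -am "Experiment: learning_rate=0.01"

git checkout -q "$base_branch"
git checkout -q -b exp-lr-0.5
echo "learning_rate=0.5" > params.txt
git commit -q -am "Experiment: learning_rate=0.5"

# Compare two experiments directly, without switching branches.
git diff exp-lr-0.01 exp-lr-0.5 -- params.txt
```

Because each experiment is a commit, `git show <branch>:params.txt` retrieves the exact settings of any run later on.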

Collaboration

Share projects with fellow data scientists through public GitHub repositories with issue tracking. Use pull requests for code reviews.

Reproducing Results

Point deployments or publications back to specific Git commits/tags to access exact versions of code/data used for published results.
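A minimal sketch of pinning published results to a tag follows. The tag name `v1.0`, the file contents, and the commit messages are placeholders chosen for the example.

```shell
set -e
cd "$(mktemp -d)"
git init -q paper && cd paper
git config user.name "Demo User"          # placeholder identity for the demo
git config user.email "demo@example.com"

echo "v1 model code" > train.py
git add train.py
git commit -q -m "Code used for published results"

# An annotated tag marks the exact state behind the publication.
git tag -a v1.0 -m "Results reported in the paper"
published_commit=$(git rev-parse 'v1.0^{commit}')

# Work continues after publication.
echo "v2 model code" > train.py
git commit -q -am "Refactor training loop"

# Later: read the tagged version of a file without disturbing current work...
git show v1.0:train.py        # prints: v1 model code

# ...or check out the whole tagged state (detached HEAD) to rerun the analysis.
git checkout -q v1.0
```

Quoting the tag name or the full commit hash in a paper or deployment record is what lets someone else land on exactly this state.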

Continuous Integration

Automate model building/evaluation pipelines using GitHub Actions, Travis CI, etc. Catch errors early by running tests on code commits/pulls.

Careful use of Git in these ways keeps data science work organized and repeatable for you and others. It's an essential skill for professionals.

Advanced Version Control

While the basics of tracking changes and collaborating through Git and GitHub form a solid foundation, Git also offers a range of more powerful techniques. Here are some of them:

- Rebasing takes commits made on one branch and replays them one by one on top of another, such as master, rewriting history into a linear sequence. This keeps the history clean by avoiding unnecessary merge commits.

- Stashing temporarily shelves uncommitted changes so you can switch tasks without losing work. Later, the changes can be reapplied from the stash and then committed or discarded.

- Log digs into the revision history, showing commit messages, author details, and even changed lines. Combined with filters, such as file names, it brings the desired commits quickly into focus.

- Interactive rebasing opens an editor listing multiple commits so you can reorder, squash, split, or edit them in sequence. This makes it possible to clean up a messy series of commits or rebase work across branches.

- GitHub flow capitalizes on pull requests to implement, review, and integrate code changes between branches. Features are developed on branches or forks and proposed through pull requests while the mainline stays ready for integration. Merge conflicts are resolved manually by comparing the textual differences between files and keeping the intended changes from every contributor.

- Tags attach meaningful labels like "1.0" to specific commits to pinpoint history for distribution, documentation, or rollback. They mark a point in history without changing the commits or branches themselves.
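Stashing, rebasing, and log filtering can be tried together in a throwaway repository. The sketch below is one possible sequence, with placeholder file names, branch names, and demo identity; it uses a plain (non-interactive) rebase so it can run unattended.

```shell
set -e
cd "$(mktemp -d)"
git init -q advanced && cd advanced
git config user.name "Demo User"          # placeholder identity for the demo
git config user.email "demo@example.com"

echo "base" > model.py
git add model.py
git commit -q -m "Initial model"
main_branch=$(git symbolic-ref --short HEAD)   # 'master' or 'main', depending on configuration

# Stash: shelve an uncommitted edit, confirm the tree is clean, then restore it.
echo "work in progress" >> model.py
git stash -q
status_while_stashed=$(git status --porcelain)
git stash pop -q
git commit -q -am "Finish model change"

# Rebase: replay a feature branch on top of newer commits from the main branch.
git checkout -q -b feature-x
echo "feature" > feature.py
git add feature.py
git commit -q -m "Add feature"

git checkout -q "$main_branch"
echo "fix" > fix.py
git add fix.py
git commit -q -m "Fix on main branch"

git checkout -q feature-x
git rebase -q "$main_branch"

# Log: the history is now linear and can be filtered by file.
git log --oneline --graph
git log --oneline -- feature.py
```

Rebasing rewrites commit hashes, so it is generally safest on branches that nobody else has based work on yet.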

Practicing these Git mechanisms day to day in real projects is what builds real command of versioned collaboration at scale. They elevate version control beyond routine file tracking into a powerful tool.

Final Thoughts

Version control is increasingly important in data science due to growing data volumes, reproducibility needs, and the collaborative nature of the work. Git, being the de facto standard, offers data scientists a robust and flexible system for managing all types of project artifacts. With diligent practice of the techniques outlined here, Git can help streamline workflows and take data science to the next level. Start using it in your next project.