In 2021, we saw rapid adoption of cloud computing for AI and ML applications, driven by the growing popularity of cloud-based data lakehouses and data warehouses. As more and more data moved to the cloud, businesses faced a difficult architectural decision. They could store selected, structured data in a costly data warehouse, where high-speed analytics run at good price/performance. Or they could keep all their data, structured and unstructured, in a cheaper data lake that lacks built-in query and analytics tools.

As data science advances, AI and machine learning will soon influence every sector. According to Nvidia, there are approximately 12,000 AI startups around the globe, a figure worth keeping in mind as the 2020s unfold. We are witnessing an explosion of AI possibilities, one that will bring scalable AI and change how people behave as they adapt to a new world.

The latest trends and predictions in Data Science to look forward to in 2022

Data Science is an exciting area for researchers because it is increasingly interconnected with how the next generation of businesses, society, governance, and policy operates. Learners use the term frequently, and the concept is easy to define: data science is an interdisciplinary field that uses methods, algorithms, processes, and systems to extract knowledge and insights from structured and unstructured data and to apply them across a wide range of applications. Data science is therefore closely linked to the explosion of Big Data and to harnessing it for human advancement, machine learning, and AI systems.

The year 2021 had many ups and downs; however, for most people it was better than 2020. Life was gradually returning to normal, and familiar traditions were once again being observed. Here are the trends that will shape data science in 2022:

1. Organizations will accelerate their culture transformation programs.

Companies will accelerate their culture transformation initiatives. Data culture refers to the habits and beliefs of people who practice, value, and promote the use of data to improve the quality of their decisions. It is the foundation and mindset that companies need to maximize the value of their ever-growing data sources, and its absence is an obstacle on any organization's path to becoming data-driven. According to a recent study, Big Data and AI investment has become nearly universal: 99 percent of companies report active investment in these areas, and 91.9 percent say the pace of investment is accelerating. Despite these sums, companies are struggling to extract value from their Big Data and AI investments and to become data-driven.

The following findings from a 2021 survey illustrate the challenges companies face:

- 48.5% are driving innovation with data.
- 41.2% are competing on analytics.
- 39.3% are managing data as a valuable business asset.
- 30% have a clearly defined information strategy for their business.
- 29.2% are experiencing transformational business results.
- 24.4% have created a data culture.
- 24% have built a data-driven organization.

There is a lot of room for improvement and growth. After all, becoming a data-driven enterprise is an ongoing process that has only just begun. Notably, for the fifth consecutive year, executives reported that cultural issues, not technological challenges, are the greatest obstacle to implementing data-driven initiatives successfully and achieving business results.

2. NLP leads the way for the next gen of low-code data tools.

At its Build developer conference, Microsoft unveiled the first features in a product powered by GPT-3, the powerful natural language model created by OpenAI. These features help people build apps without having to learn how to write formulas or code.

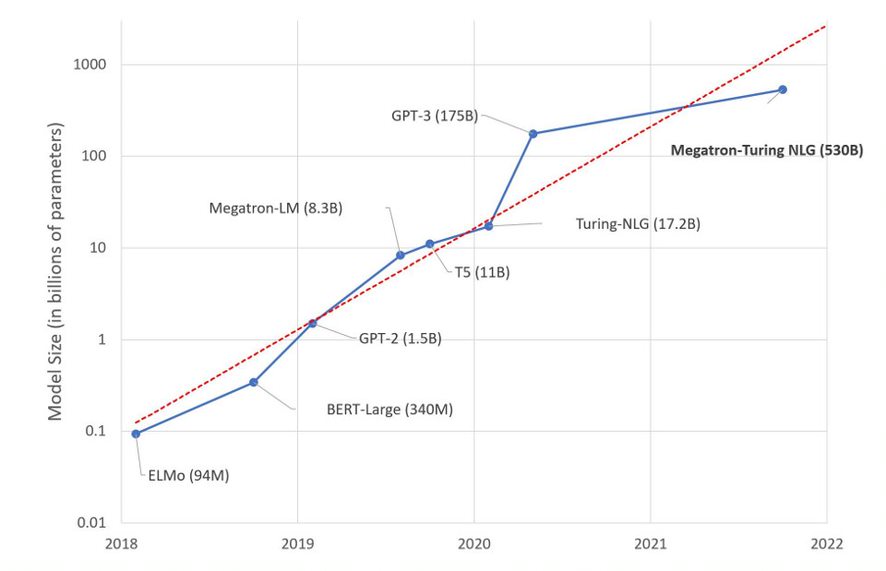

NLP has seen tremendous growth over the last few years, driven by the race to build ever-larger large language models (LLMs) such as T5, GPT-3, and Megatron-Turing NLG.

GPT-3 is being integrated into Microsoft Power Apps, the low-code app development platform that serves all types of users, from those with little or no coding experience (also known as "citizen developers") to professional developers with a deep understanding of programming, helping them create applications that make businesses and processes more efficient. Examples include apps for reviewing donations to nonprofit organizations, managing travel during COVID-19, and cutting the time needed to maintain wind turbines.

LLMs are pushing the boundaries of what NLP can accomplish. The latest models have astonished people with their ability to generate diverse types of text (such as computer code and guitar tabs) without explicit instructions or task-specific training. Here is how they have grown over the years:

3. Organizations will strengthen their data governance

The growing demand for self-service analytics has also increased the need for accurate, actionable, high-quality data. However, assessing and maintaining data quality becomes harder as data grows in size and complexity. This is why companies are adjusting their data governance practices.

One example of such a strategy is building data observability into data pipelines. Simply put, data observability aims to detect, troubleshoot, and resolve data issues in near real time. From 2022 onwards, more companies will expand their data governance programs and adopt modern tools to detect and monitor data quality issues.
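As a rough illustration of the kind of check an observability layer might run, here is a minimal Python sketch that inspects one batch of pipeline records for three common symptoms: low volume, missing values, and stale data. The `QualityRule` and `check_batch` names, the thresholds, and the sample records are hypothetical assumptions for illustration, not the API of any particular observability tool; real tools track many more signals and run such checks continuously.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class QualityRule:
    """Hypothetical thresholds for one pipeline stage (illustrative only)."""
    required_fields: tuple[str, ...]
    min_rows: int
    max_null_rate: float       # e.g. 0.05 means at most 5% missing values per field
    max_staleness: timedelta   # how old the newest record may be


def check_batch(rows: list[dict], rule: QualityRule, now: datetime) -> list[str]:
    """Return human-readable issues found in one batch; an empty list means it passed."""
    issues: list[str] = []

    # Volume check: a sudden drop in row count often signals an upstream failure.
    if len(rows) < rule.min_rows:
        issues.append(f"volume: {len(rows)} rows < expected minimum {rule.min_rows}")

    # Completeness check: share of missing values for each required field.
    for field in rule.required_fields:
        missing = sum(1 for r in rows if r.get(field) is None)
        rate = missing / len(rows) if rows else 1.0
        if rate > rule.max_null_rate:
            issues.append(f"completeness: {field} is {rate:.0%} null")

    # Freshness check: the newest record must be recent enough.
    timestamps = [r["updated_at"] for r in rows if r.get("updated_at") is not None]
    if not timestamps or now - max(timestamps) > rule.max_staleness:
        issues.append("freshness: no sufficiently recent records")

    return issues


if __name__ == "__main__":
    now = datetime(2022, 1, 10, tzinfo=timezone.utc)
    rule = QualityRule(required_fields=("customer_id", "amount"), min_rows=3,
                       max_null_rate=0.05, max_staleness=timedelta(days=1))
    batch = [  # hypothetical sample records
        {"customer_id": 1, "amount": 9.5, "updated_at": now - timedelta(hours=2)},
        {"customer_id": 2, "amount": None, "updated_at": now - timedelta(hours=3)},
    ]
    for issue in check_batch(batch, rule, now):
        print(issue)
    # volume: 2 rows < expected minimum 3
    # completeness: amount is 50% null
```

Returning a list of issues rather than raising on the first failure lets a pipeline log every problem in a batch at once and decide separately whether to alert, quarantine the batch, or halt.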

4. MLOps will continue to evolve within organizations

Machine Learning Model Operationalization Management, also known as "MLOps," focuses on the lifecycle of model development and use, that is, operationalizing and deploying machine learning models. MLOps is a set of practices that combines data engineering, machine learning, and DevOps.

Companies can extract enormous value from machine learning by running production-grade AI systems. This is why the MLOps market is expected to grow dramatically; in fact, it is projected to be worth $126.1 billion in 2025. In the coming year, MLOps tools such as Kubeflow and MLflow are expected to mature. It is only a matter of time before they become standard for all data science teams.

5. Data mesh is gaining momentum

Today, most data architectures take the form of data lakes. That could change as a new kind of data architecture emerges to address the shortcomings of data lakes: the "data mesh," a concept introduced by Zhamak Dehghani. A data mesh is a collection of "data products," each managed by a cross-functional team of product managers and data engineers. Adopting a data mesh lets companies deliver data faster and achieve greater business agility within their domains.

Soon, as the difficulties of working with data lakes become more apparent, businesses will start to experiment with data mesh, as Zalando and Intuit have done.

6. Resolving the growing concerns about data quality

Many key data science scenarios require access to very large datasets. Everything from machine learning (ML) algorithms that monitor network security devices to Enterprise Resource Planning (ERP) applications depends on access to huge databases.

Even though many organizations have been collecting and locating the data needed to power their tools, they have not always made data quality management a priority.

2021 was one of the first years in which improving data quality became a focus area. Even so, some organizations still do not trust that their data is safe or useful.

Tendu Yogurtcu, CTO of Precisely, believes that data quality initiatives will not only continue in the coming year but will intensify, as more companies expressed concern about the reliability of their data in 2021.

"Data quality and data integrity will remain major issues for businesses in 2022," Yogurtcu said. Companies are clearly becoming more dependent on data, but the main concern is data integrity, not just quantity.

"And even though most businesses have built a solid foundation for data-driven decision-making, they are also struggling to ensure data integrity at scale. For example, 80% of the chief data officers surveyed by Corinium have data quality issues that hinder integration.

"Businesses can enrich their data by incorporating contextual information from third-party data and by eliminating data silos, providing better-quality data to the business."

7. Leaning on AI to monitor network performance

Artificial intelligence applications are growing across all industries, in areas such as process automation, cybersecurity, and customer service. However, AI is typically used as a supporting technology that improves existing solutions such as workflows, campaigns, and dashboards. Very few companies have used AI to replace these tools completely. Jeff Aaron, VP of enterprise marketing at Juniper Networks, believes that 2022 could see AI become the primary technology for monitoring networks, replacing even administrative dashboards.

"AI-driven assistants will replace all monitoring, troubleshooting, and management processes within network systems," Aaron said. "They say video killed the radio star; now artificial intelligence, together with natural language processing (NLP) and natural language understanding (NLU), will kill the dashboard star.

"Looking ahead, the days of scouring and pecking at charts are going by the wayside, since you can type a question and receive answers, or have issues identified for you and, in certain cases, even resolved automatically, which is called self-driving."

"You're likely to see more AI-driven assistants replacing dashboards and changing how we troubleshoot and solve problems, effectively eliminating the swivel-chair interface."

8. Localization meets globalization in data compliance

New global data regulations and compliance deadlines are already planned for the next few years, and more are likely to be added. Many businesses have concentrated on their own specific regulatory requirements. However, as global corporations expand into new markets with strict policies, localized data compliance and management will become more important in 2022. Sovan Bin, CEO of Odaseva, said that the emergence of new global regulations will require companies to take action.

"Privacy regulations will expand globally and will require more localized implementation and storage," Bin said. "In 2021, China adopted its Personal Information Protection Law (PIPL) with astonishing speed, which further demonstrates this trend. The scope of the law will become clearer when the implementing regulations are enacted in 2022."

9. Scalable AI that can keep pace with rapid business growth

Today's businesses combine statistics, system architecture, machine learning, data analytics, data mining, and more. To work coherently, these components must be integrated into scalable, flexible models that can process large quantities of data at internet scale.

Scalable AI refers to the ability of data models, algorithms, and infrastructure to operate at the speed, scale, and complexity the job requires.

When it comes to designing and managing data structures, scalability helps address shortages of high-quality data and the problems involved in collecting it. It also makes data efforts more sustainable, because capabilities can be reused and recombined across business problems.

Scaling ML and AI development requires setting up production and deployment data pipelines, building flexible system architectures, and adopting modern acquisition techniques so that you can keep up with, and take advantage of, rapid developments in AI technology.

Scalable algorithms and infrastructure bring AI capabilities to the most critical tasks, from centralized data center workloads to distributed, cloud-enabled and network-enabled apps on edge devices.

Scalable AI is harder than it appears. It requires aligning the various scalability components with the book of business to improve technical performance and to address data security and integration challenges across data volumes and systems. In 2022, companies will need people who can tackle these challenges and build a solid modeling workflow.

10. Predictive analysis can boost performance.

Companies are using analytics to improve their performance and the experience they deliver. Predicting and planning for the future is an essential part of every business, so companies today need predictive models that learn from historical data to forecast patterns and behaviors.

For example, HR teams use models to improve employee retention and organizational efficiency. Retailers use data to forecast customer purchasing patterns, such as the growing popularity of e-commerce over physical stores, and to understand customers at greater scale and on a more personal level. Predictive analytics has brought a revolution to marketing.
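To make "forecasting patterns from historical data" concrete, here is a minimal Python sketch that fits a straight-line trend by ordinary least squares to a short series of monthly purchase counts and extrapolates it forward. The function names and the figures are hypothetical, chosen only for illustration; real predictive analytics would typically use richer models (seasonality, customer-level features) and validate them on held-out data before trusting a forecast.

```python
def fit_linear_trend(history: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of y = intercept + slope * t, with t = 0, 1, 2, ..."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    t_mean = (n - 1) / 2
    y_mean = sum(history) / n
    # Slope = covariance(t, y) / variance(t); the intercept makes the line pass through the means.
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(history))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    intercept = y_mean - slope * t_mean
    return intercept, slope


def forecast(history: list[float], steps: int) -> list[float]:
    """Extrapolate the fitted trend `steps` periods past the end of the history."""
    intercept, slope = fit_linear_trend(history)
    n = len(history)
    return [intercept + slope * (n + k) for k in range(steps)]


if __name__ == "__main__":
    monthly_orders = [100, 110, 120, 130]  # hypothetical purchase counts per month
    print(forecast(monthly_orders, 2))     # [140.0, 150.0]
```

A linear trend is the simplest possible predictive model; its value here is showing the core pattern shared by more sophisticated approaches: fit parameters on past observations, then apply them to future time steps.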

In short, in the coming years countless industries and businesses will use advanced analytics to reap the rewards: predicting future customer value and behavior, creating more efficient products, and offering high-quality services that increase their profits.