A batch data pipeline is a structured, automated system that processes large volumes of data in batches at scheduled intervals. This distinguishes it from real-time (streaming) processing, which handles data as it arrives. The batch approach is most useful when results are not needed immediately, for example when source databases are updated at predefined intervals.

Batch data pipelines are used in many industries, including finance, retail, and healthcare, and for tasks such as log analysis. They improve operational efficiency and simplify analytics by running recurring processing automatically.

For example, consider a retail company analyzing its daily sales transactions. Real-time analysis may not be essential for its business needs, so a batch data pipeline can collect all sales data at the end of each business day and process it in one batch. This uses resources efficiently and supports more complex analytical tasks, such as calculating daily revenue, identifying sales trends, and managing inventory.

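A data pipeline is a series of steps that moves data from one location to another. It typically follows either an extract, transform, load (ETL) pattern, where data is transformed before it is loaded into the target system, or an extract, load, transform (ELT) pattern, where raw data is loaded first and transformed inside the target. Popular tools in this space include Apache Kafka, Apache Airflow, and Apache NiFi, which cover different parts of the job: Kafka moves data between systems as event streams, Airflow schedules and orchestrates workflows, and NiFi designs and routes data flows. Together they provide frameworks for pipeline design, scheduling, monitoring, and maintaining data integrity and traceability.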
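To make the ETL/ELT distinction concrete, here is a minimal sketch using only the Python standard library. The raw sales rows are hypothetical, and an in-memory SQLite database stands in for the warehouse: in the ETL function the revenue is computed in Python before loading, while in the ELT function the raw rows are loaded first and the same aggregation runs inside the database as SQL.

```python
import sqlite3

# Hypothetical raw sales rows: (product, quantity, unit_price)
RAW_ROWS = [
    ("apple", 3, 0.5),
    ("banana", 2, 0.25),
    ("apple", 1, 0.5),
]


def etl(conn: sqlite3.Connection) -> None:
    """Extract -> Transform (in Python) -> Load only the finished result."""
    revenue: dict[str, float] = {}
    for product, qty, price in RAW_ROWS:  # transform before loading
        revenue[product] = revenue.get(product, 0.0) + qty * price
    conn.execute("CREATE TABLE revenue_etl (product TEXT, revenue REAL)")
    conn.executemany("INSERT INTO revenue_etl VALUES (?, ?)", revenue.items())


def elt(conn: sqlite3.Connection) -> None:
    """Extract -> Load the raw rows -> Transform inside the database with SQL."""
    conn.execute("CREATE TABLE raw_sales (product TEXT, quantity INTEGER, unit_price REAL)")
    conn.executemany("INSERT INTO raw_sales VALUES (?, ?, ?)", RAW_ROWS)
    conn.execute(
        """
        CREATE TABLE revenue_elt AS
        SELECT product, SUM(quantity * unit_price) AS revenue
        FROM raw_sales
        GROUP BY product
        """
    )


conn = sqlite3.connect(":memory:")
etl(conn)
elt(conn)
for table in ("revenue_etl", "revenue_elt"):  # fixed table names, safe to interpolate
    print(table, conn.execute(f"SELECT * FROM {table} ORDER BY product").fetchall())
```

Both functions produce the same revenue table; what differs is where the transformation runs. ELT keeps the raw rows in the target, so they can be re-transformed later without extracting them again.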

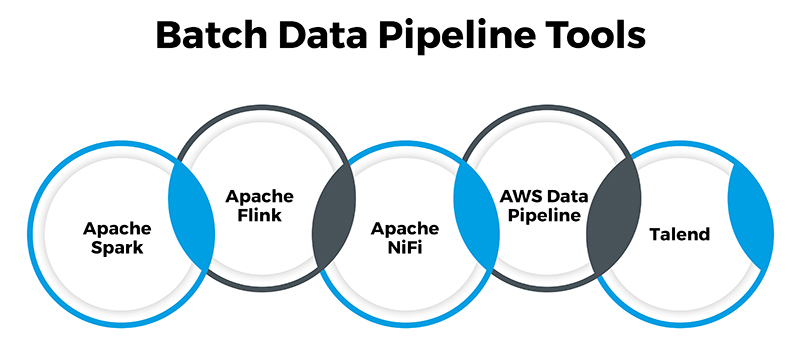

Batch Data Pipeline Tools

Selecting the right tools for a batch data pipeline is critical. They should match the project's needs in terms of data volume, processing complexity, scalability, and integration with existing systems. Ease of use, community support, and compatibility with your current technology stack also matter.


Apache Spark

Apache Spark is a widely used open-source framework for distributed data processing, with libraries for batch processing, stream processing, machine learning, and graph processing. Its in-memory computing model makes it well suited to large-scale data transformations.

Apache Flink

Apache Flink is an open-source stream processing framework that also supports batch processing, with low latency and high throughput. It is well suited to real-time analytics and complex event-driven processing.

Apache NiFi

Apache NiFi is a popular open-source data integration tool known for its web-based, user-friendly interface, which makes it easy to design complex data flows for tasks such as data ingestion and routing.

AWS Data Pipeline

AWS Data Pipeline is a cloud-based Amazon Web Services (AWS) service for orchestrating data workflows, with pre-built connectors for various AWS services. Note that AWS has placed the service in maintenance mode and it is no longer available to new customers.

Talend

Talend is a comprehensive data integration and ETL platform with many data connectors and transformation tools, known for its user-friendly interface and reliability in developing data integration solutions.

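These tools serve different needs, from distributed processing (Spark, Flink) to visual flow design (NiFi, Talend) and managed cloud orchestration (AWS Data Pipeline). Together they offer a range of features for developing and managing batch data pipelines.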

Components of Batch Data Pipeline

The components of a batch data pipeline are fundamental building blocks that collectively process and move data in scheduled batches.

These components include:

- Data Sources: These are the origins of the data, such as databases, files, or APIs, from which the data is extracted for processing.

- Data Processing: This component involves cleaning, transforming, and enriching the data to make it usable for analysis or storage.

- Data Storage: Once processed, the data is stored in a data warehouse, database, or other storage systems for future use.

- Data Movement: This component involves moving the processed data from the processing stage to its storage destination.

- Data Validation: Ensuring the accuracy and quality of the data before it is processed or moved further along the pipeline.

- Workflow Orchestration: Managing the sequence of tasks and dependencies in the data pipeline to ensure efficient and timely processing.

- Monitoring and Logging: Keeping track of the data pipeline's performance and identifying any issues or bottlenecks for optimization.

- Error Handling: Managing and resolving errors that occur during the data processing or movement stages.

- Data Retention and Archiving: Determining how long the data should be retained and how it should be archived for future reference.

Understanding these components is essential for designing and implementing an efficient batch data pipeline, whether the data engineer is working with big data, data warehousing, data integration, or databases.
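Several of these components, namely data validation, error handling, and monitoring and logging, often live together in a small piece of code. The sketch below is a minimal standard-library illustration under an assumed record schema (the field names `order_id`, `product`, `quantity`, and `unit_price` are hypothetical): each record is validated, invalid records are set aside with their reasons instead of failing the whole batch, and the outcome is logged.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("batch_pipeline")

# Hypothetical schema: every record must carry these fields.
REQUIRED_FIELDS = ("order_id", "product", "quantity", "unit_price")


def validate(record: dict) -> list[str]:
    """Return a list of problems with the record (empty if it is valid)."""
    problems = [f"missing {f}" for f in REQUIRED_FIELDS if record.get(f) is None]
    if not problems:  # only check values once all fields are present
        if record["quantity"] <= 0:
            problems.append("quantity must be positive")
        if record["unit_price"] < 0:
            problems.append("unit_price must be non-negative")
    return problems


def process_batch(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split a batch into valid records and rejected records with their reasons."""
    valid, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            # Error handling: quarantine the bad record rather than aborting the batch.
            rejected.append((record, problems))
            log.warning("rejected %s: %s", record.get("order_id"), "; ".join(problems))
        else:
            valid.append(record)
    # Monitoring: one summary line per batch.
    log.info("batch done: %d valid, %d rejected", len(valid), len(rejected))
    return valid, rejected


batch = [
    {"order_id": 1, "product": "apple", "quantity": 3, "unit_price": 0.5},
    {"order_id": 2, "product": "banana", "quantity": 0, "unit_price": 0.25},
    {"order_id": 3, "product": None, "quantity": 1, "unit_price": 1.0},
]
valid, rejected = process_batch(batch)
```

In a real pipeline the rejected records would typically be written to a separate location for later inspection or reprocessing, which is where the retention and archiving policy comes in.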

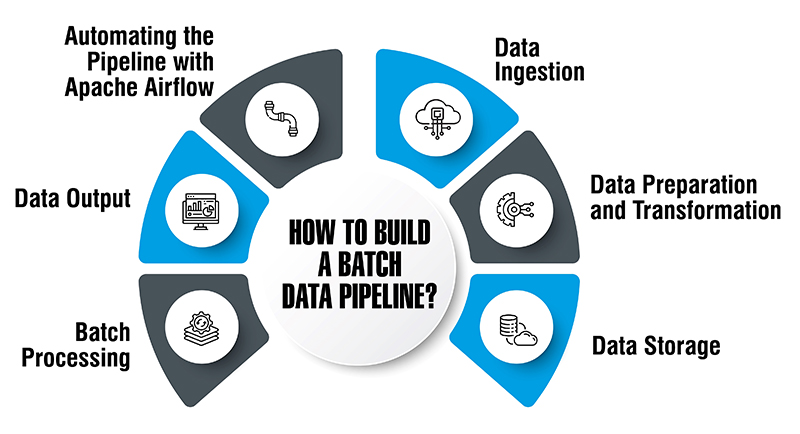

How to Build a Batch Data Pipeline?

Building a batch data pipeline involves several steps, each of which affects the pipeline's efficiency and effectiveness. Let's walk through them using the example of analyzing customer sales data for a retail company:


1) Data Ingestion

This initial step gathers data from various sources and prepares it for processing. Data ingestion often relies on tools and scripts that extract data from databases, APIs, or external files. For this example, assume the sales data is stored in CSV files; Python with the pandas library can ingest these efficiently.
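A minimal pandas sketch of this ingestion step might look like the following. It assumes the daily sales exports are CSV files in one directory with the columns `order_date`, `product`, `quantity`, and `unit_price`; the function name, directory layout, and column names are all hypothetical.

```python
from pathlib import Path

import pandas as pd


def ingest_sales(data_dir: str, pattern: str = "*.csv") -> pd.DataFrame:
    """Read every CSV file matching `pattern` in `data_dir` into one DataFrame."""
    files = sorted(Path(data_dir).glob(pattern))
    if not files:
        # Fail loudly: an empty input usually means an upstream export did not run.
        raise FileNotFoundError(f"no files matching {pattern!r} in {data_dir!r}")
    frames = [
        pd.read_csv(f, parse_dates=["order_date"])  # hypothetical date column
        .assign(source_file=f.name)  # lineage: which file each row came from
        for f in files
    ]
    return pd.concat(frames, ignore_index=True)
```

Calling `ingest_sales("sales_data")` would return a single DataFrame holding every file's rows. Recording `source_file` is an optional design choice that makes it easier to trace a bad row back to the export that produced it.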

2) Data Preparation and Transformation

Once ingested, the data needs to be cleaned and prepared for analysis. This step, known as data transformation, can involve filtering, aggregating, joining, or creating new features, depending on the project's requirements. For the sales example, we can calculate the total revenue for each product.
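The transformation for this example could be sketched as a pandas group-by. The column names are hypothetical (an `order_id` column is assumed so duplicates can be detected), revenue is taken to be `quantity * unit_price`, and treating non-positive quantities as rows to exclude is likewise an assumption.

```python
import pandas as pd


def revenue_by_product(sales: pd.DataFrame) -> pd.DataFrame:
    """Clean the raw rows, then compute total revenue per product."""
    cleaned = (
        sales.dropna(subset=["product", "quantity", "unit_price"])  # drop incomplete rows
        .drop_duplicates(subset="order_id")  # an order exported twice counts once
        .query("quantity > 0")  # assumption: skip returns and zero-quantity rows
    )
    return (
        cleaned.assign(revenue=cleaned["quantity"] * cleaned["unit_price"])
        .groupby("product", as_index=False)["revenue"]
        .sum()
        .sort_values("revenue", ascending=False, ignore_index=True)
    )


sales = pd.DataFrame(
    {
        "order_id": [1, 2, 3, 3, 4],
        "product": ["apple", "banana", "apple", "apple", None],
        "quantity": [3, 2, 1, 1, 5],
        "unit_price": [0.5, 0.25, 0.5, 0.5, 1.0],
    }
)
print(revenue_by_product(sales))
```

Here the duplicated order 3 is counted once and order 4, which has no product, is dropped, so the result holds one revenue row each for apple and banana.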

3) Data Storage

After processing, the data needs a storage destination. In batch data pipelines, data is typically stored in databases, data lakes, or flat files, and the choice should match the project's scalability and data retention requirements. For this example, the transformed data can be stored in a CSV file.
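Writing the result to CSV is a single `to_csv` call in pandas. The sketch below adds two assumptions of its own: each run writes a date-stamped file (the naming scheme is hypothetical) so a daily run does not overwrite the previous day's output, and the file is written under a temporary name and then renamed so downstream jobs never see a half-written file.

```python
from datetime import date
from pathlib import Path

import pandas as pd


def store_daily_result(result: pd.DataFrame, out_dir: str, run_date: date) -> Path:
    """Write one batch's result to <out_dir>/product_revenue_<YYYY-MM-DD>.csv."""
    out_path = Path(out_dir) / f"product_revenue_{run_date.isoformat()}.csv"
    out_path.parent.mkdir(parents=True, exist_ok=True)
    # Write to a temporary file first, then rename it into place; on POSIX
    # systems the rename is atomic, so readers see either no file or the full file.
    tmp_path = out_path.with_name(out_path.name + ".tmp")
    result.to_csv(tmp_path, index=False)
    tmp_path.replace(out_path)
    return out_path
```

For example, `store_daily_result(result, "output", date(2024, 1, 1))` would write `output/product_revenue_2024-01-01.csv`.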

4) Batch Processing

Batch processing is the core phase of a batch data pipeline: data is processed in chunks, or batches, at scheduled intervals. Depending on the project's objectives, it may involve complex calculations, data enrichment, or summarization. Apache Spark, a popular distributed data processing framework, is well suited to large-scale batch transformations. In our example, you can use Spark to aggregate sales data by month.

5) Data Output

After processing the data with Spark, you may need to store or output the results. Spark can write to databases (for example over JDBC) and to file formats such as Parquet, from which the results can be loaded into visualization tools.

6) Automating the Pipeline with Apache Airflow

The final step is to automate the batch data pipeline with Apache Airflow, a workflow orchestration tool for scheduling and monitoring pipeline tasks. In Airflow, you define the workflow and its task dependencies as a DAG (directed acyclic graph), and you can, for example, schedule the pipeline to run daily at a specific time.

By following these steps, you can build a robust batch data pipeline for analyzing customer sales data or for other data processing needs. Each step contributes to the pipeline's efficiency, scalability, and reliability.

Batch Data Pipeline vs. Streaming Data Pipeline

Batch data pipelines and streaming data pipelines are two fundamental approaches to data processing. Each offers unique benefits and use cases.

Batch Data Pipeline

- Processing Model: Batch data pipelines process data in large, discrete batches at scheduled intervals.

- Use Cases: Ideal for scenarios where data latency is acceptable, such as generating daily reports or processing historical data.

- Advantages: Simpler to implement and more cost-effective, since real-time processing capabilities are not required.

- Example: Analyzing sales data at the end of each day to identify trends.

Streaming Data Pipeline

- Processing Model: Streaming data pipelines process data continuously as it arrives, enabling real-time or near-real-time analytics.

- Use Cases: Suitable for scenarios requiring low latency, such as fraud detection or real-time monitoring.

- Advantages: Enables faster decision-making and immediate responses to changing data.

- Example: Monitoring website traffic to detect and respond to anomalies in real time.

Both approaches have their strengths and are applicable in different contexts. The choice between batch and streaming pipelines depends on the specific requirements of the use case, including the need for real-time processing, data latency tolerance, and the complexity of the data processing tasks involved. Understanding the differences between these two approaches is essential for designing effective data processing solutions.
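The difference in processing model can be illustrated with a toy example in plain Python. The events are hypothetical: the batch-style function waits until the whole day's events are available and computes the total once, while the streaming-style generator updates its state as each event arrives, so an up-to-date partial total is available after every event.

```python
from collections.abc import Iterable, Iterator

# Hypothetical sales events for one day: (timestamp_seconds, amount)
EVENTS = [(10, 5.0), (3600, 12.5), (7200, 2.5)]


def batch_total(events: Iterable[tuple[int, float]]) -> float:
    """Batch model: collect everything first, then compute the result once."""
    collected = list(events)  # the whole batch must be available before processing
    return sum(amount for _, amount in collected)


def streaming_totals(events: Iterable[tuple[int, float]]) -> Iterator[float]:
    """Streaming model: update state per event and emit a result immediately."""
    running = 0.0
    for _, amount in events:
        running += amount
        yield running  # a fresh partial answer after every event


print(batch_total(EVENTS))
print(list(streaming_totals(EVENTS)))
```

Both models reach the same final total; the streaming version pays for its lower latency by having to keep state between events, which is part of why streaming pipelines are usually harder to build and operate.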

Conclusion

Understanding batch data pipelines is crucial for any data engineer or professional working with large volumes of data. These pipelines offer a structured and efficient way to process data at scheduled intervals, making them ideal for scenarios where real-time processing is not necessary. By following the steps outlined in this guide, you can build a robust batch data pipeline for your organization's data processing needs.

Additionally, it's essential to consider the tools and technologies available for building batch data pipelines. Popular tools like Apache Spark, Apache Flink, and Apache NiFi offer powerful features for processing and managing data in batch pipelines. By selecting the right tools for your project's requirements, you can ensure the success of your batch data pipeline implementation.

Batch data pipelines play a crucial role in modern data processing workflows. By understanding their components, tools, and best practices, you can build efficient and scalable pipelines that meet your organization's data processing needs.